Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

How Audiences Play "The Fire in the Storm"

Our team hacked together an interactive journey driven by microphone inputs, bringing together the audience, performer, and experience. Here's how it worked.

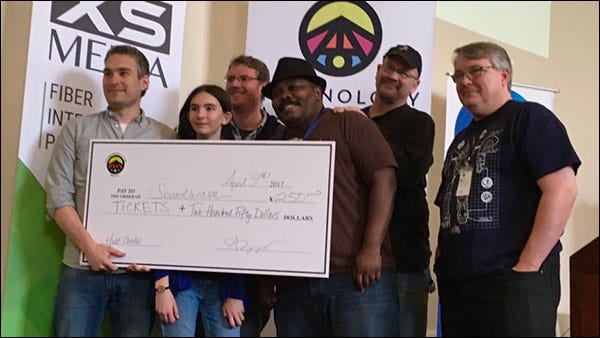

Hack for a Cause is an annual weekend event in Eugene, Oregon, where teams use technology to address social and civic issues. Our five-man team took on a challenge to grow and engage new audiences for our city's performance center. We won by presenting "The Fire in the Storm," a visual exploration of universal themes driven by vocal performance and audience participation. Using audio analysis, interactive art, and a wordless story, we brought together a crowd of strangers for a few wonderful minutes.

To the best of our knowledge, this has never been done before... not like this. And in the spirit of open-source knowledge, here is how it worked.

AUDIO INPUTS

Our first step was to identify the inputs we had available. Initially, all we had was raw data: an array of floating-point values delivered by the microphone buffer. Using open-source code, we could derive loudness and a pitch spectrum from that buffer. Once these three inputs (raw, loudness, and pitch) were connected with context and meaning, they became powerful, interactive tools.

Raw mic input has a fascinating quality all its own. The mind makes a clear connection between the smooth curves of a hum and the harsh jaggies of the spoken word. We used this to forge a literal connection between the performer and the game.

FFT (Fast Fourier Transform) analysis gives us a frequency (pitch) spectrum. Given a clear, distinct sound, like a whistle with low background noise, there is a visible peak that marks its pitch. By setting lower and upper bounds on the spectrum, we can give the performer direct pitch control over anything driven by a normalized value, such as a color gradient.
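As a rough illustration of that peak-picking step, here is a minimal Python sketch. It assumes mono samples in a plain list and a power-of-two buffer length, and it uses a textbook radix-2 FFT rather than the open-source code the team actually used. The function names, the frequency bounds, and the blue-to-red gradient are hypothetical.

```python
import cmath
import math

def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def peak_frequency(samples, sample_rate, low_hz, high_hz):
    """Frequency (Hz) of the strongest bin between low_hz and high_hz, or None."""
    spectrum = fft(samples)
    n = len(samples)
    bin_hz = sample_rate / n  # width of one FFT bin
    best_bin, best_mag = None, -1.0
    for k in range(1, n // 2):  # skip DC, positive frequencies only
        if low_hz <= k * bin_hz <= high_hz:
            mag = abs(spectrum[k])
            if mag > best_mag:
                best_bin, best_mag = k, mag
    return None if best_bin is None else best_bin * bin_hz

def normalized_pitch(freq, low_hz, high_hz):
    """Map a frequency to 0..1 inside the chosen bounds, clamped at both ends."""
    t = (freq - low_hz) / (high_hz - low_hz)
    return max(0.0, min(1.0, t))

def lerp_color(a, b, t):
    """Linear interpolation between two RGB tuples (hypothetical gradient)."""
    return tuple(ca + (cb - ca) * t for ca, cb in zip(a, b))

# Example: a 1000 Hz "whistle" sampled at 8192 Hz, bounds 500 to 2000 Hz.
sr, n = 8192, 1024
whistle = [math.sin(2 * math.pi * 1000 * i / sr) for i in range(n)]
freq = peak_frequency(whistle, sr, 500, 2000)
color = lerp_color((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), normalized_pitch(freq, 500, 2000))
```

Whistling from low to high would sweep the normalized value from 0 toward 1 and slide the color along the gradient. Pitches outside the bounds are either ignored during peak-picking or clamped.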

(whistling low to high)

Root Mean Square (RMS) gives us a loudness index. It is not a decibel value per se: it starts at 0 (total quiet) and realistically goes up to 3 or 4. Since we don't fully understand its range yet, we used an arbitrary value to normalize the input. The result could drive particle scale and emission rate directly, for example, or serve simply as a measure of audience participation.
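RMS itself is simple: square each sample, average the squares, and take the square root. The sketch below assumes a ceiling of 3.0 (a hypothetical stand-in for the "arbitrary value" above) and clamps anything louder to 1. The actual scale of the raw values depends on the audio API and any gain applied.

```python
import math

# Hypothetical normalization ceiling, echoing the "3 or 4" range mentioned above.
LOUDNESS_CEILING = 3.0

def rms(samples):
    """Root mean square of a buffer: sqrt(mean(sample^2)); 0.0 for silence."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def normalized_loudness(samples, ceiling=LOUDNESS_CEILING):
    """Scale RMS into 0..1 using a fixed ceiling; louder input clamps to 1."""
    return min(rms(samples) / ceiling, 1.0)
```

A value such as `normalized_loudness(buffer) * max_emission_rate` could then feed a particle system directly.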

Finally, by tracking loudness over time, we could check for spikes, indicating (for example) loud claps or stomps.
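One simple way to spot such spikes is to compare each new loudness reading with the average of the last few frames. The post doesn't describe its exact rule, so the window length, ratio, and minimum level below are assumptions for illustration.

```python
from collections import deque

class SpikeDetector:
    """Flags a spike when loudness jumps well above its recent average.

    window:    number of recent frames kept as the baseline (assumed value).
    ratio:     how many times louder than the baseline counts as a spike.
    min_level: ignore jumps that are still quiet in absolute terms.
    """

    def __init__(self, window=30, ratio=3.0, min_level=0.2):
        self.history = deque(maxlen=window)
        self.ratio = ratio
        self.min_level = min_level

    def update(self, loudness):
        """Feed one normalized loudness reading per frame; True on a spike."""
        warmed_up = len(self.history) == self.history.maxlen  # full baseline only
        baseline = sum(self.history) / len(self.history) if self.history else 0.0
        self.history.append(loudness)
        return (warmed_up
                and loudness >= self.min_level
                and loudness > baseline * self.ratio)
```

The spike reading is also appended to the history, which raises the baseline briefly and keeps a single clap from registering as several consecutive spikes.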

THE GAME SEQUENCE

We opened on a sky of dark, roiling clouds. We had the audience tap their thighs, simulating the sound of softly falling rain.

(Click here for the animated GIF capture)

When the loudness rose above a target, rain started falling from the clouds, slowly at first and then faster. But when people stopped making sound, the rain slowed to a stop! This established a clear connection between audience and experience. After a few seconds of being "above the threshold," the rain was fully "on." The sound filled the room, and we asked people to be quiet for a moment, to just listen and be.
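The rain behavior can be modeled as an intensity value that ramps up while the room is above the threshold and ramps down when it isn't. Here is a minimal sketch that assumes the rain locks "on" once it reaches full strength, since the post implies it kept falling after the audience went quiet. The threshold, ramp times, and drop rate are hypothetical.

```python
class RainController:
    """Rain intensity (0..1) that builds while the room is loud and fades when quiet.

    threshold:   normalized loudness the audience must exceed (assumed value).
    ramp_up_s:   seconds of sustained sound needed to reach full rain.
    ramp_down_s: seconds of silence for partial rain to stop completely.
    """

    def __init__(self, threshold=0.3, ramp_up_s=4.0, ramp_down_s=2.0):
        self.threshold = threshold
        self.ramp_up_s = ramp_up_s
        self.ramp_down_s = ramp_down_s
        self.intensity = 0.0  # 0 = dry, 1 = full rain
        self.locked_on = False

    def update(self, loudness, dt):
        """Advance by dt seconds given the current normalized loudness."""
        if self.locked_on:
            return self.intensity
        if loudness > self.threshold:
            self.intensity = min(1.0, self.intensity + dt / self.ramp_up_s)
            if self.intensity >= 1.0:
                self.locked_on = True  # fully "on": keeps raining when the room goes quiet
        else:
            self.intensity = max(0.0, self.intensity - dt / self.ramp_down_s)
        return self.intensity

    def emission_rate(self, max_drops_per_s=800.0):
        """Particle emission rate scaled by the current intensity."""
        return self.intensity * max_drops_per_s
```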

We asked the audience to clap together on the count of three. When the code detected a tremendous spike in the loudness value, we slowed the game down dramatically (pitching down the rain sound as well) and triggered a bolt of lightning that raced toward the ground. The crowd fell silent almost immediately when the trigger hit, possibly trying to process this novel spectacle.
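Slowing the game and pitching down the audio can share a single value: if sound plays back at the same rate as game time, its pitch drops by 12 × log2(rate) semitones. The sketch below eases a global time scale toward a target using real (unscaled) time. The 0.1 slow-motion scale and the easing speed are assumptions, and an engine would apply `time_scale` and the playback rate through its own APIs.

```python
import math

class SlowMotion:
    """Eases a global time scale toward a target; audio playback rate follows it."""

    def __init__(self, slow_scale=0.1, ease_per_s=3.0):
        self.slow_scale = slow_scale  # hypothetical slow-motion speed
        self.ease_per_s = ease_per_s  # how quickly the scale approaches its target
        self.time_scale = 1.0
        self.target = 1.0

    def trigger(self):
        """Call when the clap spike is detected and the lightning starts."""
        self.target = self.slow_scale

    def release(self):
        """Call when the bolt hits the sticks: back to normal speed."""
        self.target = 1.0

    def update(self, real_dt):
        """Exponential ease toward the target, driven by unscaled real time."""
        k = 1.0 - math.exp(-self.ease_per_s * real_dt)
        self.time_scale += (self.target - self.time_scale) * k
        return self.time_scale

    def audio_rate(self):
        """Playback rate for the rain sound, tied to the time scale."""
        return self.time_scale

def semitone_shift(rate):
    """Pitch change in semitones when audio plays back at the given rate."""
    return 12.0 * math.log2(rate)
```

At an assumed time scale of 0.1, the rain would drop roughly 40 semitones, a little over three octaves. That drop is what turns the patter into a low rumble.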

As the lightning zig-zagged downwards through slow-falling rain, we piped the raw mic input into the line renderer, creating a fascinating connection between voice and visual.
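Piping the waveform into a line renderer boils down to resampling the mic buffer to one value per vertex and using that value as a sideways offset. This sketch assumes a mostly vertical bolt described by 2D points, a non-empty buffer roughly in the range -1 to 1, and nearest-neighbour resampling. The function names and the amplitude are hypothetical.

```python
def resample(samples, count):
    """Pick `count` evenly spaced samples (nearest neighbour) from the buffer."""
    if count == 1:
        return [samples[0]]
    step = (len(samples) - 1) / (count - 1)
    return [samples[round(i * step)] for i in range(count)]

def bolt_points(path, samples, amplitude=0.5):
    """Displace each (x, y) point of the lightning path sideways by the waveform.

    path:    base points of the zig-zag from cloud to tip.
    samples: the latest raw mic buffer.
    Returns new points to hand to the line renderer each frame.
    """
    offsets = resample(samples, len(path))
    return [(x + amplitude * o, y) for (x, y), o in zip(path, offsets)]
```

Recomputing the points every frame with the newest buffer makes the bolt shimmer smoothly during a hum and jitter harshly during speech.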

(Click here for the animated GIF capture)

The camera followed the tip of the lightning as the pitched-down rain faded to nothing, building juicy anticipation just before the bolt hit a pile of sticks with a boom and a flash, bringing time back to normal.

(Click here for the animated GIF capture, which is pretty awesome!)

I handed the mic to our performer (my daughter, stepping up bravely on little notice!), who sang pretty notes and made the fire dance. The fire was keyed to her loudness: a small sound made a small fire, and a loud one made a larger fire. But it lacked a certain "oomph..."

(At this point, the goal was to have the performer lead the audience, everyone singing "ahhh" in a major key and rising in volume until BOOM! The fire would roar to life! This would unify the connection between the performer, audience, and experience. Unfortunately, we ran into an unanticipated limitation of Mac OS: it can only use one mic input at a time. It RECOGNIZES both but can only read ONE. So we hit the spacebar to advance the sequence manually.)

With the campfire at full strength, the performer's voice had more of an impact. Not only did the flames get larger, but they changed color as well! After a few rounds of this, we gave the mic to a few kids to try it out on their own, thus pulling audience members into "the show."
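The post doesn't say exactly what drove the color change, so this sketch assumes both flame size and color come from the singer's normalized loudness, scaled by a campfire "strength" value. At low strength the fire stays small and orange. At full strength, loud singing makes it large and shifts it along the gradient. The gradient stops, the scale range, and the strength parameter are all hypothetical.

```python
# Hypothetical gradient stops: (position 0..1, RGB)
FIRE_GRADIENT = [
    (0.0, (1.0, 0.3, 0.0)),  # deep orange
    (0.6, (1.0, 0.8, 0.1)),  # yellow
    (1.0, (0.4, 0.6, 1.0)),  # hot blue-white
]

def sample_gradient(stops, t):
    """Linearly interpolate a multi-stop color gradient at t (clamped to 0..1)."""
    t = max(0.0, min(1.0, t))
    for (p0, c0), (p1, c1) in zip(stops, stops[1:]):
        if t <= p1:
            u = (t - p0) / (p1 - p0)
            return tuple(a + (b - a) * u for a, b in zip(c0, c1))
    return stops[-1][1]

def fire_visuals(loudness01, strength, base_scale=0.5, max_scale=3.0):
    """Flame scale and color from normalized loudness and campfire strength.

    strength: 0..1 campfire level (1 once the fire has roared to life).
    Both the size range and the color position are multiplied by strength,
    so a weak fire stays small and near the first (orange) stop.
    """
    scale = base_scale + loudness01 * (max_scale - base_scale) * strength
    color = sample_gradient(FIRE_GRADIENT, loudness01 * strength)
    return scale, color
```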

In the background, a bit of color started to show in the sky. Tribal drums began playing at a slow tempo, backed by a simple chord progression. We encouraged the audience to clap along, and when they clapped in time with the music, the sun started to rise. If the audience stopped or fell off the beat, the sun slid back down. And so the audience worked together to raise the sun, using one of our few universal "verbs" (clapping) to share one of our few universal concepts: the beauty of a sunrise.
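To check whether a clap is "in time," you can measure how far its timestamp falls from the nearest beat of the drum track. This sketch assumes clap onsets are already detected (for example, from loudness spikes) and timestamped against music playback time. The tempo, tolerance window, and rise and fall rates are illustrative assumptions.

```python
class BeatSync:
    """Raises a 0..1 sun height for on-beat claps and lowers it otherwise.

    bpm:         tempo of the drum track (assumed value).
    tolerance_s: how far, in seconds, a clap may land from the nearest beat.
    rise:        sun change per clap (up if on the beat, down if off).
    fall_per_s:  steady sinking while nobody claps.
    """

    def __init__(self, bpm=80.0, tolerance_s=0.12, rise=0.05, fall_per_s=0.02):
        self.beat_s = 60.0 / bpm
        self.tolerance_s = tolerance_s
        self.rise = rise
        self.fall_per_s = fall_per_s
        self.sun = 0.0

    def on_clap(self, music_time_s):
        """Call with the music playback time of a detected clap; True if on beat."""
        phase = music_time_s % self.beat_s
        error = min(phase, self.beat_s - phase)  # distance to the nearest beat
        on_beat = error <= self.tolerance_s
        if on_beat:
            self.sun = min(1.0, self.sun + self.rise)
        else:
            self.sun = max(0.0, self.sun - self.rise)
        return on_beat

    def update(self, dt):
        """Sink the sun a little every frame; on-beat claps must outpace this."""
        self.sun = max(0.0, self.sun - self.fall_per_s * dt)
        return self.sun
```

The constant sinking in `update` covers the "stopped clapping" case. The penalty in `on_clap` covers claps that drift off the beat.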

With a pulse-pounding drum march and soul-lifting chords, the experience came to a close.

RECEPTION

“'The Fire in the Storm' project uniquely integrated game storytelling with audience participation. The prototype demonstration immediately captured the entire audience’s participation and engagement. It was great to see the audience go from piqued curiosity to wide smiles as they realized that they were controlling the story.” - Jay Arrera, Executive Producer at Pipeworks and event judge

“The Hult Center is the largest indoor cultural center in Eugene. It was built in the 80’s and is fighting to stay relevant in a world where low cost entertainment is pervasive. The Hult challenged the local tech community at the ‘2017 Hack for a Cause’ event to create a new digital enablement that would broaden the venue’s audience. 'The Fire in the Storm’ solution reimagined what’s possible in the physical-to-digital medium and engages audiences in a whole new way.” - Matt Sayre, Director at the Technology Association of Oregon and event organizer.

The team is currently exploring ways to use this technology in a larger public performance.

CREDITS

Art: Mark Brenneman and Vance Naegle

Code: Ted Brown and Sam Foster

Music & Sound: Michael Jones
