Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

Bringing VR to Life

This post briefly covers a few tips you can put to use when designing and building your virtual reality experience.

“On the other side of the screen, it all looks so easy.”

- Kevin Flynn, in Tron (1982)

I fondly remember watching Tron as a child. I was overwhelmed by the potential for endless beauty that could be generated by a computer. I kept dreaming of the day I would live in a world of synthesized light and sound.

Many attempts have been made since those early days of computer graphics to deliver the dream of virtual reality to the masses. Today, cell phone technology is driving down the cost of all computing elements required for a compelling VR experience. The dawn of virtual reality is finally here.

Something magical happens when we achieve the right balance between head movement and visual feedback. The brain believes that the visuals are solid and real. You truly feel as if you are experiencing an alternate reality. Sensor fusion is a term you may hear more of as VR matures. This refers to the algorithms which take readings from the multiple sensors used to track your head movements and fuse them together to properly align the visual experience. Having our visual sense correlate itself with our head movement seems to give the brain a ‘thumbs up’ to its believability. I think that the power of VR will drive us to correlate more of our physical senses together. For example, audio will be spatially modified with head movement and explosions in the virtual world may stimulate haptic sensors attached to our bodies.
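To make the idea of sensor fusion concrete, here is a minimal sketch of a complementary filter, one of the simplest fusion schemes. This is only an illustration and not necessarily what any particular headset uses. The function name, the single pitch axis, and the `alpha` blend factor are all assumptions chosen for this example.

```python
import math

def complementary_filter(pitch_deg, gyro_rate_dps, accel_y, accel_z, dt, alpha=0.98):
    """Fuse one gyroscope and one accelerometer sample into a pitch estimate (degrees).

    All names and the alpha value are illustrative assumptions.
    """
    # Integrating the gyroscope is responsive but drifts over time.
    gyro_pitch = pitch_deg + gyro_rate_dps * dt
    # The gravity direction from the accelerometer is noisy but does not drift.
    accel_pitch = math.degrees(math.atan2(accel_y, accel_z))
    # Trust the gyroscope in the short term and the accelerometer in the long term.
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
```

Called once per sensor sample, the estimate follows fast head motion from the gyroscope while the accelerometer term slowly pulls any accumulated drift back toward the true gravity direction.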

Five Tips for VR Creators

The wonderful thing about virtual reality is that it delivers a unique and intuitive way to interact with a computer. Instead of using input devices that we have been accustomed to over the past 30 years (namely the mouse, keyboard, buttons and joysticks), we will be able to use our heads and eventually our whole bodies to navigate any digital landscape we desire. This will, no doubt, open up a world of possibilities for games and other experiences.

Here are a few things to think about when creating your virtual reality masterpiece:

1. Iterate Quickly on New Ideas

When designing in a new medium like VR, the ability to iterate quickly is highly desirable. Many aspects of a virtual world do not seem obviously wrong until you step inside the virtual environment itself.

For example, one important aspect to take into account is the scale of your world. On a 2D screen, inaccuracies in the geometric relationships between 3D objects are easily forgiven. As soon as we dive into the same world with a VR display, those relationships become more important. Furthermore, simple textures that worked on a 2D screen may appear too grainy and low-resolution in VR, becoming noticeable and possibly distracting.

Iterating on the features that matter most to your overall design while keeping the world highly optimized is a challenge in today’s games and will prove even more so in VR. Using a tool like Unity for quick experimentation enables developers to iterate rapidly and discover what works best in VR. Knowing what doesn’t work in virtual reality will be just as important as knowing what does. All of this knowledge can only help advance VR into a digital frontier that we can all use to create amazing experiences that were simply impossible until now.

2. New Mechanics for New Input Devices

VR is in its infancy. Aside from head orientation, there is no standard set of interfaces specifically used for VR navigation. We rely mainly on gamepads, mice, and keyboards to select items and for locomotion. Fortunately, there are some unique hardware interfaces currently available which can be used to prototype new VR mechanics today.

The original Microsoft Kinect has been around for quite some time now. It uses structured light (specifically, an infrared laser and CMOS sensor) to create a depth map. This in turn can be used to extract geometric relationships of various objects in the real world, including a player’s skeletal structure. However, its high latency and low resolution mapping are not ideal for truly immersive VR experiences.

The Leap Motion is another device that relies on infrared light and multiple cameras. It is meant for tracking a user's hand movements, and although it has a higher capture resolution than the original Kinect, it has a much smaller range (roughly a hemispherical volume with a radius of about half a meter).

Another device, the Razer Hydra, uses a weak magnetic field to detect the absolute position and orientation of both of the player’s hands. Many excellent demos have paired the Razer Hydra with the Oculus Rift, allowing one to combine positional head tracking and hand awareness. However, the device is subject to magnetic interference and global field nonlinearities, which can drastically affect the VR experience.

I expect everything will change as VR matures. Highly accurate position and orientation tracking of your head will become standard. After that, hand tracking and eventually full body awareness (with haptics) will follow. These interfaces will combine inertial, optical, magnetic, ultrasonic and other sensor types to refine VR input. It will be exciting to see how these new interfaces are used, particularly when coupled with novel experiences which have not yet been explored.

3. A Few Words on Latency

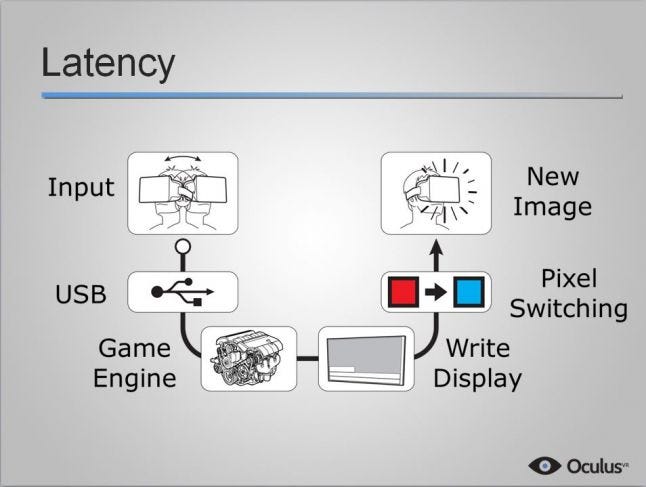

Of all the issues that need to be solved for VR to be believable, latency takes precedence. Ideally, the “Motion to Photon” latency (that is, the time from when your head moves to when that movement is represented on the display) should be no greater than 20 milliseconds. Above that, the experience does not feel nearly as real.

On the Rift, the sensors are read and fused together at a very high sampling rate of 1000Hz. All of this happens in 1 millisecond, and USB adds another 1 millisecond of latency. However, there are quite a few software and hardware layers sandwiched between reading the head tracking sensors and the resulting screen render. Each layer (such as screen update, rendering, and pixel switching) incurs some latency. Typically, the total latency is 40 to 50 milliseconds.

“Motion to Photon” layers that may incur latency

Fortunately, much of this latency can be covered up by predicting where your head will be. This is achieved by taking the current angular velocity of your head (found from the gyroscopes of the tracker) and projecting forward in time by a small amount to render slightly in the future so that the user perceives less latency. The Oculus SDK currently reports both predicted and unpredicted orientation, and the predicted results are quite accurate.
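The prediction idea described above can be sketched in a few lines. This is a minimal illustration that assumes the angular velocity stays constant over the look-ahead interval and is expressed in the tracker's own frame. It is not the Oculus SDK's implementation, and the function names and quaternion layout (w, x, y, z) are assumptions made for this example.

```python
import math

def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def predict_orientation(orientation, angular_velocity, lookahead_s):
    """Project a unit quaternion forward in time.

    orientation: current head orientation (w, x, y, z).
    angular_velocity: gyroscope reading in rad/s (x, y, z), in the tracker's frame.
    lookahead_s: how far into the future to predict, in seconds.
    Assumes the angular velocity stays constant over the interval.
    """
    wx, wy, wz = angular_velocity
    speed = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = speed * lookahead_s
    if angle < 1e-9:
        # Head is effectively still, so there is nothing to predict.
        return orientation
    # Build the small rotation covered during the look-ahead interval.
    s = math.sin(angle / 2.0) / speed
    delta = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    # Body-frame rates compose on the right of the current orientation.
    return quat_multiply(orientation, delta)
```

A renderer would call `predict_orientation` with a look-ahead roughly equal to the remaining pipeline latency and draw the scene from the predicted pose rather than the measured one. The further ahead you predict, the more a sudden change in head motion shows up as error.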

An excellent blog post on VR latency, written by Steve LaValle (Principal Scientist here at Oculus), can be found here: http://www.oculusvr.com/blog/the-latent-power-of-prediction/

Prediction should not be the only technique relied upon to minimize latency. As VR systems improve, the layers mentioned above will each contribute less time to the latency pipeline. High frame-rate OLED displays, running at 90Hz or higher, will have an immediate impact on keeping latency low. It will be exciting to see the future technical breakthroughs which will be used to tackle this very important VR issue.

4. Keep the Frame Rate Constant

Just as important as latency reduction is keeping the visual frame-rate both consistent and as high as the hardware will allow. The LCD panel in the Rift Development Kit refreshes at 60Hz. A drop below 60 frames per second typically translates into a ‘stuttering’ image, and the effect is jarring enough to hinder the VR illusion.
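One practical way to watch for this is to compare measured frame times against the per-frame budget implied by the refresh rate. The sketch below is a hypothetical helper and not part of any engine or SDK. The `tolerance_ms` slack and the sample frame times are made-up values for illustration.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz

def count_missed_frames(frame_times_ms, refresh_hz=60.0, tolerance_ms=0.5):
    """Count frames whose render time exceeded the refresh budget.

    tolerance_ms is an assumed allowance for timer jitter.
    """
    budget = frame_budget_ms(refresh_hz)
    return sum(1 for t in frame_times_ms if t > budget + tolerance_ms)

# Illustrative frame times in milliseconds (not real measurements).
sample = [16.0, 16.6, 33.4, 17.0, 20.0]
missed = count_missed_frames(sample)
```

At 60Hz the budget is about 16.7 milliseconds per frame, so in the sample above the 33.4 and 20.0 millisecond frames count as misses.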

Stuttering from a fluctuating frame-rate is different from another kind of visual anomaly called ‘judder’. In the context of virtual reality, judder refers to the visual artifacts (perceived smearing and strobing) that arise because the display panel cannot stay synchronized with head and eye movement. Think of it this way: as you move your head around, the display holds a single image for the duration of a frame (at 60Hz, that is approximately 16.7ms). However, your head keeps moving during that frame, and the eyes and other senses continue to integrate at sub-frame accuracy. By the time the next frame appears, you have been staring at the previous one for a short duration before the image pops to its new position. This constant popping from one frame to the next while your head is moving is what causes judder.
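To get a feel for the scale involved, the short calculation below estimates how far the head turns while a single frame is held on screen. The head speed of 120 degrees per second is an illustrative assumption, not a figure from any measurement, and the function name is hypothetical.

```python
def rotation_per_frame_deg(head_speed_dps, refresh_hz):
    """Degrees the head turns while the display holds one frame."""
    return head_speed_dps / refresh_hz

# Assumed head speed of 120 degrees per second, for illustration only.
at_60hz = rotation_per_frame_deg(120.0, 60.0)
at_90hz = rotation_per_frame_deg(120.0, 90.0)
```

Under that assumption, the image is held still across 2 degrees of head rotation at 60Hz and about 1.3 degrees at 90Hz. A higher refresh rate shrinks each pop.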

Judder can be corrected, but this requires improvements to be made to display panels used for VR. A great blog post by Michael Abrash talks about judder in more detail: http://blogs.valvesoftware.com/abrash/why-virtual-isnt-real-to-your-brain-judder/

5. Smashing Simulator Sickness

Within the inner ear of humans (and most mammals) lies what is known as the vestibular system. This system contains its own set of sensors (distinct from the five external senses we all know about) and is the leading contributor to our sense of movement. It is able to register both rotational and linear acceleration.

When we turn our heads, the vestibular system senses rotational acceleration. This sense fuses with the images we are seeing in a head-mounted display (such as the Rift) and the result is a convincing virtual reality experience.

However, when one of the senses in the vestibular system does not line up with what is being seen, the brain ‘suspects’ something is not right. The result is a potential visceral response to the experience. This sensation is what we refer to as ‘simulator sickness’.

Many situations can trigger this sensation. One such case is when the user moves their physical head around but the virtual head position is not visually tracked. The result is that the positional sensor in the vestibular system is being stimulated but there is no corresponding change in what is seen. Another is when the user is moved through the environment unexpectedly using an external controller. Here, the visual display may be rotating and moving without corresponding stimulation of the vestibular system.

Simulator sickness can be reduced by keeping the dissonance between the vestibular system and the visual display to a minimum. For example, keeping the user stationary while looking around the scene is a relatively comfortable experience, because the visual and head movements are almost perfectly matched. However, many experiences will require the user to freely move around an environment. Using non-intrusive forms of stimulation (such as audio, visual cues, and haptic interfaces) may give us the ability to reduce and possibly remove simulator sickness entirely. We might even be able to mask simulator sickness by actively engaging the vestibular system to control movement; we could locomote or rotate the player simply by tilting the head. It is this particular area of virtual reality research where experimentation and knowledge sharing will become vital.
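As a starting point for experimenting with head-tilt locomotion, the sketch below maps a head tilt angle to a movement speed. Everything here is a hypothetical design choice for illustration: the function name, the dead zone that ignores small unintentional tilts, the maximum tilt, and the top speed would all need tuning through playtesting.

```python
import math

def tilt_to_speed(tilt_deg, dead_zone_deg=5.0, max_tilt_deg=25.0, max_speed=2.0):
    """Map a signed head tilt (degrees) to a signed speed (units per second).

    All thresholds are assumed values, not recommendations.
    """
    magnitude = abs(tilt_deg)
    if magnitude <= dead_zone_deg:
        # Ignore small tilts so normal looking around does not move the player.
        return 0.0
    # Clamp so that extreme tilts do not exceed the top speed.
    magnitude = min(magnitude, max_tilt_deg)
    fraction = (magnitude - dead_zone_deg) / (max_tilt_deg - dead_zone_deg)
    # Preserve the tilt direction in the resulting speed.
    return math.copysign(fraction * max_speed, tilt_deg)
```

With these defaults, a 15 degree forward tilt gives half of the top speed, and leaning back past 25 degrees moves the player backward at full speed.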

A Brave New (Virtual) World

We are at the start of an exciting era in human-computer interaction. Although the current goal is to fuse computer visuals with head motion, it does not stop here. As virtual reality becomes more mature, our other senses will be tapped into as we start to take ‘physical’ form in the virtual worlds we are creating. The ability to use our hands to actually build from inside our virtual creations will no doubt bring forth experiences that we cannot currently imagine. Eventually, our avatars will begin to solidify within the virtual space, and jumping between the physical and virtual world will feel like teleportation.

I, for one, cannot wait to finally be transported to the Grid.
