Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

Screenspace raytraced reflections are a very popular technique that has appeared in many recent game titles. Why do so many games use it? What are its benefits and disadvantages? Should future games rely on this imperfect solution?

Introduction

The technique was first mentioned by Crytek among some of their improvements (like screenspace raytraced shadows) in their DirectX 11 game update for Crysis 2 [1], and then in a couple of their presentations, articles and talks. In my free time I implemented a prototype of this technique in CD Projekt's Red Engine (pure brute force, without any filtering or reprojection) and the results were quite "interesting", but definitely not usable. At that time I was also working hard on the Xbox 360 version of The Witcher 2, so there was no way I could improve it or ship it in the game I worked on, and I just forgot about it for a while.

At Sony Devcon 2013, Michal Valient mentioned in his presentation about Killzone: Shadow Fall [2] that they used screenspace reflections together with localized and global cubemaps as a general-purpose and robust solution for indirect specular and reflectivity, and the results (at least on screenshots) were quite amazing.

Since then, more and more games have used it and I was lucky to be working on one - Assassin's Creed 4: Black Flag. I won't dig deeply into the details of our exact implementation here - to learn them, come and see my talk at GDC 2014 or wait for the slides! [7]

Meanwhile, I will share some of my experience with this technique: its benefits, its limitations, and the conclusions of my numerous talks with friends at my company. Given the increasing popularity of the technique, I find it really weird that nobody seems to share their ideas about it...

The Good

The advantages of screenspace raymarched reflections are quite obvious, and they are the reason why so many game developers got interested in them:

The technique works with any potential reflector plane (any orientation, any distance), and every point of the scene is in fact potentially reflective. It works properly with curved surfaces, waves on the water, normal maps and surfaces at different heights.

It is trivial to implement* and integrate into a pipeline. It can be a completely isolated piece of code, just a couple of post-effect-like passes that can be turned on and off at any time, making the effect fully scalable for performance considerations.

Screenspace reflections provide great SSAO-like occlusion, but for the indirect specular that comes from, for example, environment cubemaps. It will definitely help you with objects that look too shiny on their edges in shadowed areas.

It requires almost no CPU cost and no potentially long setup of additional render passes. I think this is quite a common reason to use this technique - not all games can afford to spend a couple of milliseconds on a separate culling and rendering pass for reflected objects. Maybe this will change with draw indirect and similar techniques - but still, just the geometry processing cost on the GPU can be too much for some games.

Every object and material can be reflected at zero cost - you already evaluated the shading.

Finally, with deferred lighting being an industry standard, re-lighting or doing a forward pass for classic planar / cube reflectors can be expensive.

Cubemaps are usually baked for a static sky, lighting and materials / shaders. You can forget about seeing cool sci-fi neons and animated panels, or on the other hand your clouds or particle effects, being reflected in them.

Usually you apply a Fresnel term to your reflections, so the most visible screenspace reflections are also the best case for the technique: at grazing angles, where the reflectance is highest, most rays should hit some on-screen information.
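To make the Fresnel argument a bit more concrete, here is a minimal sketch using Schlick's approximation. This is only an assumption for illustration: the post does not say which Fresnel formulation was used in the game, and the function name and the 0.04 base reflectance (a commonly quoted value for non-metals) are mine.

```python
def schlick_fresnel(f0: float, cos_theta: float) -> float:
    """Schlick's approximation of Fresnel reflectance.

    f0        -- reflectance at normal incidence (hypothetical input, e.g. 0.04)
    cos_theta -- cosine of the angle between the view vector and the normal
    """
    # Clamp so that slightly out-of-range dot products do not blow up the power.
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


if __name__ == "__main__":
    # Looking straight down at a surface vs. looking along it.
    for cos_theta in (1.0, 0.5, 0.1, 0.0):
        print(cos_theta, schlick_fresnel(0.04, cos_theta))
```

The reflection weight stays close to the base value when looking straight at a surface and rises towards 1 at grazing angles, which is exactly where the reflected ray tends to point away from the camera and land on something visible on screen.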

(*) When I say it is trivial to implement, I mean that you can get a working prototype in a day or so if you know your engine well. However, to get it right and fix all the issues you will spend weeks and write many iterations, and there will for sure be lots of bug reports spread over time.

We have seen all those benefits in our game. In these two screenshots you can see how screenspace reflections easily enhanced the look of the scene, making objects more grounded and attached to the environment.

AC4 Screenspace reflections off

AC4 Screenspace reflections on

One thing worth noting is that in this level - Abstergo Industries - walls had complex animations and emissive shaders on them and it was all perfectly visible in the reflections - no static cubemap could allow us to achieve that futuristic effect.

The Bad

Ok, so this is a perfect technique, right? Nope. The final look in our game is the result of quite long and hard work on tweaking the effect, optimizing it a lot and fighting with various artifacts. It was heavily scene dependent and sometimes it failed completely. Let's have a look at what can cause those problems.

Limited information

Well, this one is obvious. With all screenspace-based techniques you will miss some information. With screenspace reflections, problems are caused by three types of missing information:

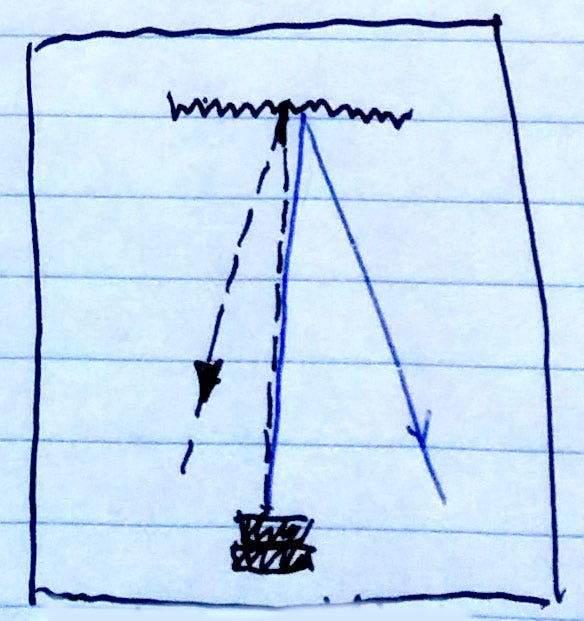

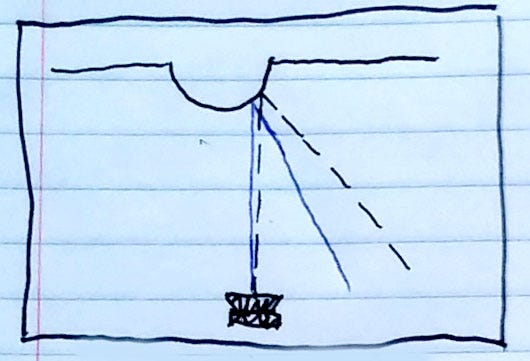

Off-viewport information. Quite trivial and obvious - our rays exit the viewport area without hitting anything relevant. With regular in-game FOVs this will often be the case for rays reflected from pixels located near the screen corners and edges. This one is usually the least problematic, as you can smoothly blend out the reflections near those corners or when the ray faces the camera.

Back or side-facing information. Your huge wall will cover 0 pixels if it is not viewed from the front side, and you won't see it reflected... This will be especially painful for those developing third-person perspective (TPP) games - your hero won't be reflected properly in mirrors or windows. It can be a big issue for some game types (lots of vertical mirror-like surfaces), while for others it may not show up at all (just mildly glossy surfaces or reflections only on horizontal surfaces).

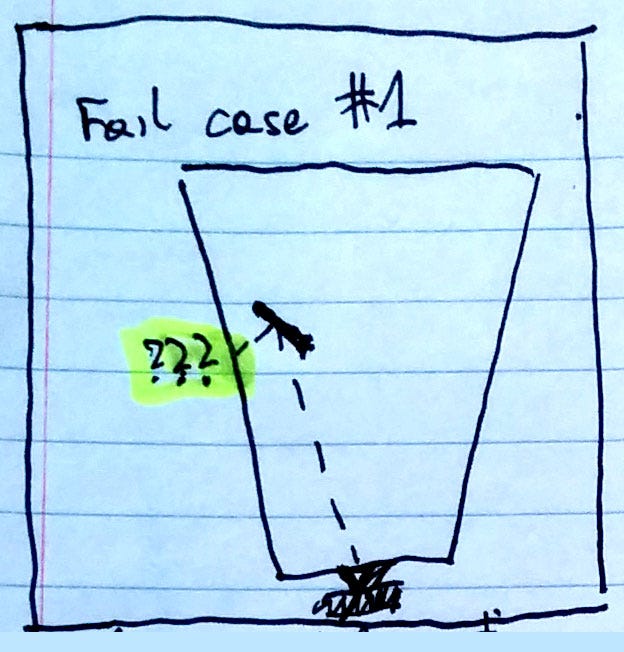

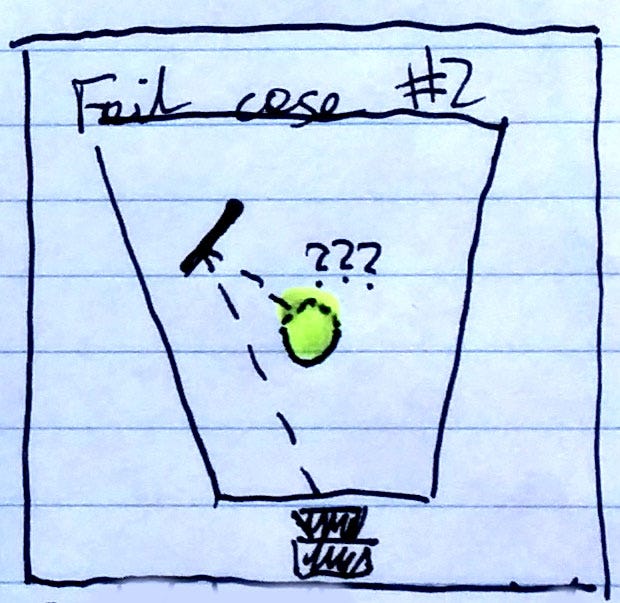

Lack of depth complexity. The depth buffer is essentially a heightfield, so you need to assume some depth (thickness) for the objects in the z-buffer. Depending on this value you will get some rays killed too soon (causing weird "shadowing" under some objects) or too late (missing obvious reflectors). This can be partly corrected by using planes and normals for the intersection tests, but it will still fail in many cases of layered objects - not to mention the lack of color information even when we know about the ray collision. This one is a huge and unsolved problem; it will cause most of the bad artifacts to appear and you will spend days trying to fix / tweak your shaders.
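The thickness trade-off from the last case can be illustrated with a toy ray march over a one-dimensional "depth buffer". This is only a sketch under simplifying assumptions (a single scanline, a fixed step, a constant thickness), not the implementation used in the game; the function name `march_ray` and all parameters are hypothetical.

```python
from typing import Optional, Sequence


def march_ray(depth: Sequence[float], x0: float, z0: float,
              dx: float, dz: float, thickness: float,
              max_steps: int = 64) -> Optional[int]:
    """March a ray across a 1D depth buffer; return the hit pixel or None.

    The depth buffer only stores the nearest surface per pixel, so every
    surface is assumed to extend `thickness` units behind its stored depth.
    """
    x, z = x0, z0
    for _ in range(max_steps):
        x += dx
        z += dz
        if x < 0.0 or x >= len(depth):
            return None  # off-viewport: no information available
        pixel = int(x)
        scene_z = depth[pixel]
        # Hit only if the ray is behind the surface but within its assumed thickness.
        if scene_z <= z <= scene_z + thickness:
            return pixel
    return None


if __name__ == "__main__":
    # Background at depth 10 with a near pillar (depth 2) at pixel 4.
    depth = [10.0, 10.0, 10.0, 10.0, 2.0, 10.0, 10.0, 10.0]
    # A ray travelling sideways at depth 5 passes "behind" the pillar's front face.
    print(march_ray(depth, 0.5, 5.0, 1.0, 0.0, thickness=1.0))  # thin assumption
    print(march_ray(depth, 0.5, 5.0, 1.0, 0.0, thickness=4.0))  # thick assumption
```

With the thin assumption the ray slips behind the pillar and leaves the viewport, so an obvious reflector is missed if the pillar is really solid. With the thick assumption the ray is stopped at the pillar, which is wrong if the pillar is really thin and produces the "shadowing" described above. The depth buffer alone cannot tell which answer is right.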

Ok, it's not perfect, but that was to be expected - all screenspace techniques that reconstruct 3D information from the depth buffer have to fail sometimes. But is it really that bad? The industry accepted SSAO and its limitations (although I think that right now we should already be transitioning to 3D techniques like the one developed for The Last of Us by Michal Iwanicki [3]), so what can be worse about SSRR? Most objects are non-metals with a strong Fresnel effect, and when the reflections are significant and visible, the required information should be somewhere around, right?

The Ugly

If the problems caused by the lack of screenspace information were "stationary", it wouldn't be that bad. The main issues are the really ugly ones:

Flickering.

Blinking holes.

Weird temporal artifacts from characters.

I've seen them in videos from Killzone, during the gameplay of Battlefield 4 and obviously I had tons of bug reports on AC4. Ok, where do they come from?

They all come from missing screenspace information that changes between frames or changes a lot between adjacent pixels. When objects or the camera move, the information available on screen changes. So you will see various noisy artifacts from the variance in normal maps, ghosting of reflections from moving characters, whole reflections or parts of them suddenly appearing and disappearing, and aliasing of objects.

Flickering from variance in normal maps

All of it gets even worse if we take into account the fact that all developers seem to be using a partial screen resolution (e.g. half res) for this effect. Suddenly even more aliasing is present, more information is not coherent between frames and we see more intense flickering.

Flickering from geometric depth / normals complexity

Obviously programmers are not helpless. We use various temporal reprojection and temporal supersampling techniques [4] (I will definitely write a separate post about them, as we managed to use them for AA and SSAO temporal supersampling), bilateral methods, conservative tests / pre-blurring of the source image, a screenspace blur on the final reflection surface to simulate glossy reflections, hierarchical upsampling, hole filling with flood-fill algorithms and, finally, blending of the results with cubemaps.

It all helps a lot and makes the technique shippable - but the problem still is, and always will be, present (simply due to the limited screenspace information).
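As a rough illustration of why temporal accumulation helps with flickering, here is a minimal sketch of an exponential history blend on a single value. It is an assumption-laden toy rather than the scheme used in the game: the names `temporal_blend` and `accumulate`, the 0.1 blend factor and the boolean validity flag are all hypothetical, and a real implementation would reproject the history with motion vectors and reject it based on depth or color differences.

```python
from typing import Iterable


def temporal_blend(history: float, current: float,
                   history_valid: bool, alpha: float = 0.1) -> float:
    """Blend the current frame's sample into the accumulated history.

    If the reprojected history is unusable (e.g. the pixel was just
    disoccluded), fall back to the current sample alone.
    """
    if not history_valid:
        return current
    return history + alpha * (current - history)


def accumulate(samples: Iterable[float], alpha: float = 0.1) -> float:
    """Run the blend over a sequence of per-frame samples for one pixel."""
    result = 0.0
    valid = False
    for sample in samples:
        result = temporal_blend(result, sample, valid, alpha)
        valid = True
    return result


if __name__ == "__main__":
    # A reflection that blinks on and off every frame.
    flicker = [1.0, 0.0] * 100
    print(accumulate(flicker))
```

The blinking input swings between 0 and 1 every frame, while the accumulated value settles into a narrow band around 0.5. The price is ghosting when the history is wrong, which is why the validity test matters as much as the blend itself.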

The future?

Ok, so given those limitations and ugly artifacts / problems, is this technique worthless? Is it just a 2013/2014 trend that will disappear in a couple of years?

I have no idea. I think that it can be very useful, and I will definitely vote for using it in the next projects I work on. It should never be the only source of reflections (for example without any localized / parallax corrected cubemaps), but as an additional technique it is still very interesting. Here are a couple of guidelines on how to get the best out of it:

Always use it as an additional technique, augmenting localized and parallax corrected baked or dynamic / semi-dynamic cubemaps. [8] Screenspace reflections will provide an excellent occlusion for those cubemaps and definitely will help to ground dynamic objects in the scene.

Be sure to use temporal supersampling / reprojection techniques to smooth the results. Use a blur with varying radius (according to surface roughness) to help on rough surfaces.

Apply a proper environment specular function (pre-convolved BRDF) [5] to the stored reflection data, so that it matches your cubemaps and analytic / direct speculars in energy conservation and intensity, and the whole scene is coherent, easy to set up and physically correct.

Think about limiting the ray range in world space. This will serve as an optimization, but also as a form of safety limit that prevents flickering from objects that are far away (and therefore tend to disappear or alias).
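Several of these guidelines (cubemap fallback, blending out near the screen edges, a world-space range limit) can be combined into a single confidence value that drives the blend. The following is a minimal sketch under my own assumptions: the function names, the 10% edge fade width and the point where the range fade starts are hypothetical tuning choices, not values from the game.

```python
from typing import Tuple

Color = Tuple[float, float, float]


def clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


def edge_fade(u: float, v: float, fade_width: float = 0.1) -> float:
    """1 in the screen interior, falling to 0 at the viewport border (u, v in [0, 1])."""
    distance_to_border = min(u, 1.0 - u, v, 1.0 - v)
    return clamp01(distance_to_border / fade_width)


def range_fade(hit_distance: float, max_range: float, fade_start: float = 0.8) -> float:
    """1 for nearby hits, falling to 0 as the hit approaches max_range (world units)."""
    t = (hit_distance / max_range - fade_start) / (1.0 - fade_start)
    return 1.0 - clamp01(t)


def resolve_reflection(ssr: Color, cubemap: Color, hit: bool,
                       u: float, v: float,
                       hit_distance: float, max_range: float) -> Color:
    """Blend the screenspace result over the cubemap by confidence."""
    confidence = edge_fade(u, v) * range_fade(hit_distance, max_range) if hit else 0.0
    return tuple(c + confidence * (s - c) for s, c in zip(ssr, cubemap))


if __name__ == "__main__":
    red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    print(resolve_reflection(red, blue, True, 0.5, 0.5, 1.0, 10.0))   # confident hit
    print(resolve_reflection(red, blue, True, 0.05, 0.5, 1.0, 10.0))  # near the edge
    print(resolve_reflection(red, blue, False, 0.5, 0.5, 0.0, 10.0))  # miss
```

Because the confidence goes to zero smoothly, a reflection that is about to lose its source information fades into the cubemap instead of popping, which is less objectionable than a blinking hole.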

Also, some of the research that is going on right now on the topic of SSAO / screen-space GI etc. can be applicable here, and I would love to hear more feedback in the future about:

Caching somehow the scene radiance and geometric information between the frames - so you DO have your missing information.

Reconstructing 3D scene for example using voxels from multiple frames' depth and color buffers - while limiting it in size (eviction of too old and potentially wrong data).

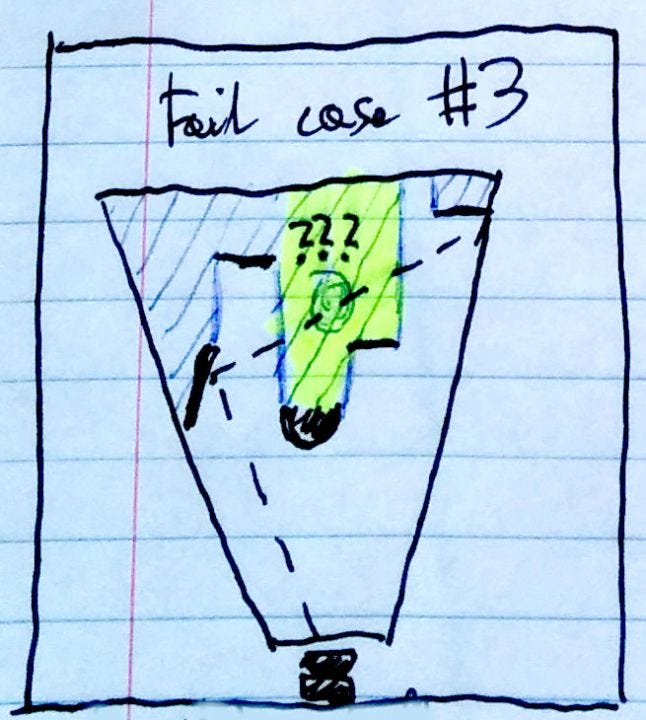

Using scene / depth information from additional surfaces - a second depth buffer (depth peeling?), shadowmaps or RSMs. It could really help to verify some of the assumptions we make, for example about object thickness, that can go wrong (fail case #3).

Using lower resolution 3D structures (voxels? lists of spheres? boxes? triangles?) to help guide / accelerate the rays [6] and then precisely detect the final collisions using screenspace information - less guessing will be required and maybe the performance could be even better.

As probably all of you have noticed, I deliberately didn't mention console performance or the exact implementation details on AC4 - for those you should really wait for my GDC 2014 talk. :)

Anyway, I'm really interested in other developers' findings (especially from those who have already shipped a game with similar techniques) and can't wait for a bigger discussion about the problem of handling the indirect specular part of the BRDF, which is often neglected in academic real-time GI research.

References

[1] http://www.geforce.com/whats-new/articles/crysis-2-directx-11-ultra-upgrade-page-2/

[2] http://www.guerrilla-games.com/presentations/Valient_Killzone_Shadow_Fall_Demo_Postmortem.html

[3] http://miciwan.com/SIGGRAPH2013/Lighting%20Technology%20of%20The%20Last%20Of%20Us.pdf

[4] http://directtovideo.wordpress.com/2012/03/15/get-my-slides-from-gdc2012/

[5] http://blog.selfshadow.com/publications/s2013-shading-course/

[6] http://directtovideo.wordpress.com/2013/05/08/real-time-ray-tracing-part-2/

[7] http://schedule.gdconf.com/session-id/826051

[8] http://seblagarde.wordpress.com/2012/11/28/siggraph-2012-talk/
