
Memory constraints are a thing of the past, right?

Turns out they’re not. Not only do many off-the-shelf engines manage memory poorly, many platforms still have some rather aggressive memory requirements. Then there are disc and cartridge-based size limitations on top of that.

Here we have a host of stories from across the industry (and across the years/platforms) about less-than-righteous methods used to fit levels, textures, and entire games into their required spaces. They may not be pretty, but they got games shipped, and nobody was the wiser… Until now.

And if you enjoy these stories, make time to check out our feature from last month in which a bunch of your fellow game developers shared the most memorable dirty coding tricks that helped them get games out the door.

About that loading screen

The game in question (a first-person shooter) had issues unloading levels cleanly on Xbox; some memory could not be reclaimed, so after finishing a level and going into the next one, the game would always crash. The Xbox had very limited memory, and unlike on a PC, a program that runs out of memory there has no hidden, slower storage to fall back on. It's immediate death.

The team actually noticed this very late, because they had the ability to directly start the game into a level. This was a feature of the game editor which allowed the programmers and designers to jump directly into the level they were working on, bypassing the main menu and the previous missions. This is a vital feature of in-development games, one so common that I have never seen a game without it (although it is often stripped before shipping).

As a result, everybody started the game from the editor directly into a level out of convenience. Except of course the ones developing the menu, but they would only start the main menu and never enter a level. So most developers on the project were not immediately aware that levels could not be cleaned up quickly.

When QA discovered this, it became quite a challenge to clean up all the leaks that late in the project. The first leaks were easy to find, but it became increasingly challenging to hunt down and reclaim every little piece of memory before starting a new level. After some work, one could start 4-5 levels in a row, but eventually the console would just crash. It was not possible to play the campaign in one go.

The team did not manage to fix everything in time. Or maybe they gave up quite early, I am not sure... But they used their development feature, and a nice API that existed on the Xbox. It was possible then (and is still possible on the Xbox 360) to ask the console to reboot itself. And it is possible to tell the Xbox what to do when it is done rebooting.

So it was possible to ask the console to reboot and restart the same game, with a parameter. And so the quick-level-starting was moved from the editor to the game itself. When finishing a level, the game would reboot the console and restart itself with a command-line argument (the name of the level to start). Of course, a reboot means a black screen for a while, so a fading screen was implemented quickly to transition to black. The console would reboot and jump into the next level, just as the game editor could do, and then fade into the new level. Voila, the perfect(?) way to clear all memory between levels.
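The same restart-with-a-parameter idea can be sketched on a desktop OS. This is only an analogy, not the Xbox API: it assumes a Python program that relaunches itself with `os.execv`, and the `--level` flag and all function names are made up for illustration.

```python
import os
import sys

LEVEL_FLAG = "--level"  # hypothetical flag name, not from the original game


def relaunch_argv(level_name):
    """Build the argument list that restarts this program straight into a level."""
    return [sys.executable, sys.argv[0], LEVEL_FLAG, level_name]


def parse_start_level(argv):
    """Return the level named after the flag, or None to show the main menu."""
    if LEVEL_FLAG in argv:
        i = argv.index(LEVEL_FLAG)
        if i + 1 < len(argv):
            return argv[i + 1]
    return None


def finish_level(next_level):
    """Replace the running process with a fresh copy of itself.

    The operating system reclaims everything the old process held,
    leaks included. A fade to black would go right before this call.
    """
    os.execv(sys.executable, relaunch_argv(next_level))
```

On startup the program would call `parse_start_level(sys.argv)` and either jump into the named level or show the menu, which is the same path the editor's quick-start feature already exercised.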

- Nicolas Mercier

RAM and Crash

I was one of the two programmers (along with Andy Gavin) who wrote Crash Bandicoot for the PlayStation 1.

RAM was still a major issue even then. The PS1 had 2MB of RAM, and we had to do crazy things to get the game to fit. We had levels with over 10MB of data in them, and this had to be paged in and out dynamically, without any "hitches"—loading lags where the frame rate would drop below 30 Hz.

It mainly worked because Andy wrote an incredible paging system that would swap in and out 64K data pages as Crash traversed the level. This was a "full stack" tour de force, in that it ran the gamut from high-level memory management to opcode-level DMA coding. Andy even controlled the physical layout of bytes on the CD-ROM disk so that—even at 300KB/sec—the PS1 could load the data for each piece of a given level by the time Crash ended up there.
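To get a feel for that budget, here is a rough back-of-the-envelope calculation. It assumes "300KB/sec" means 300 × 1024 bytes per second and ignores seek time entirely, so real loads would have been slower; the constant and function names are ours.

```python
PAGE_BYTES = 64 * 1024           # one page in the paging system
CD_BYTES_PER_SEC = 300 * 1024    # assumption: 300KB means 300 * 1024 bytes
FRAME_RATE_HZ = 30               # target frame rate from the story


def page_load_seconds(pages=1):
    """Pure transfer time for a number of pages, with no seek time included."""
    return pages * PAGE_BYTES / CD_BYTES_PER_SEC


def frames_per_page():
    """How many 30 Hz frames pass while one page streams in."""
    return page_load_seconds() * FRAME_RATE_HZ
```

Under those assumptions a single page takes about 0.21 seconds, or roughly 6.4 frames, to arrive, and a 10MB level (160 pages) is about 34 seconds of raw transfer. That is why the data had to be requested well before Crash reached it.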

I wrote the packer tool that took the resources—sounds, art, lisp control code for critters, etc.—and packed them into 64K pages for Andy's system. (Incidentally, this problem—producing the ideal packing into fixed-sized pages of a set of arbitrarily-sized objects—is NP-complete, and therefore likely impossible to solve optimally in polynomial—i.e., reasonable—time.)

Some levels barely fit, and my packer used a variety of algorithms (first-fit, best-fit, etc.) to try to find the best packing, including a stochastic search akin to simulated annealing. Basically, I had a whole bunch of different packing strategies, and would try them all and use the best result.

The problem with using a random guided search like that, though, is that you never know if you're going to get the same result again. Some Crash levels fit into the maximum allowed number of pages (I think it was 21) only by virtue of the stochastic packer "getting lucky." This meant that once you had the level packed, you might change the code for a turtle and never be able to find a 21-page packing again.
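The "try every strategy and keep the best" approach can be sketched as follows. This is a minimal illustration, not the original packer: the random search here is plain shuffled restarts of first-fit rather than anything annealing-like, every object is assumed to fit in one page, and all names are hypothetical.

```python
import random

PAGE_SIZE = 64 * 1024


def first_fit(sizes):
    """Put each object in the first page with room; return pages used."""
    free = []  # free bytes left in each open page
    for size in sizes:
        for i, room in enumerate(free):
            if size <= room:
                free[i] -= size
                break
        else:
            free.append(PAGE_SIZE - size)
    return len(free)


def best_fit(sizes):
    """Put each object in the fullest page that still has room."""
    free = []
    for size in sizes:
        fits = [i for i, room in enumerate(free) if size <= room]
        if fits:
            tightest = min(fits, key=lambda i: free[i])
            free[tightest] -= size
        else:
            free.append(PAGE_SIZE - size)
    return len(free)


def random_restarts(sizes, tries, seed):
    """First-fit over many shuffled orders; keep the lowest page count."""
    rng = random.Random(seed)
    order = list(sizes)
    best = len(order)  # worst case: one object per page
    for _ in range(tries):
        rng.shuffle(order)
        best = min(best, first_fit(order))
    return best


def pack(sizes, tries=200, seed=0):
    """Run every strategy and return (strategy name, page count) of the best."""
    results = {
        "first-fit": first_fit(sizes),
        "best-fit": best_fit(sizes),
        "first-fit decreasing": first_fit(sorted(sizes, reverse=True)),
        "random restarts": random_restarts(sizes, tries, seed),
    }
    name = min(results, key=results.get)
    return name, results[name]
```

A fixed seed makes the search repeatable for identical input, but it does nothing for the problem described above: change one object's size and the lucky ordering may no longer exist.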

There were times when one of the artists would want to change something, and it would blow out the page count, and we'd have to change other stuff semi-randomly until the packer again found a packing that worked. Try explaining this to a crabby artist at 3 in the morning.

By far the best part in retrospect—and the worst part at the time—was getting the core C/assembly code to fit. We were literally days away from the drop-dead date for the "gold master"—our last chance to make the holiday season before we lost the entire year—and we were randomly permuting C code into semantically identical but syntactically different manifestations to get the compiler to produce code that was 200, 125, 50, then 8 bytes smaller. Permuting as in, "for (i=0; i < x; i++)"—what happens if we rewrite that as a while loop using a variable we already used above for something else? This was after we'd already exhausted the usual tricks, e.g., stuffing data into the lower two bits of pointers (which only works because all addresses on the R3000 were 4-byte aligned).
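The pointer trick mentioned at the end works because a 4-byte-aligned address always has zeros in its two lowest bits, leaving them free to carry a small value. A minimal sketch, modelling addresses as plain integers (the function names and the example address are illustrative, not from the Crash codebase):

```python
TAG_MASK = 0b11  # the two low bits that are always zero in an aligned address


def pack_tagged(address, tag):
    """Hide a 2-bit tag in the unused low bits of a 4-byte-aligned address."""
    if address & TAG_MASK:
        raise ValueError("address is not 4-byte aligned")
    if not 0 <= tag <= TAG_MASK:
        raise ValueError("tag must fit in two bits")
    return address | tag


def unpack_tagged(word):
    """Split a tagged word back into (address, tag)."""
    return word & ~TAG_MASK, word & TAG_MASK
```

The cost is that every dereference has to mask the tag off first, which is exactly the kind of trade that only makes sense when every byte counts.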

Ultimately Crash fit into the PS1's memory with 4 bytes to spare. Yes, 4 bytes out of 2,097,152. Good times.

- Dave Baggett

inky.com (and Naughty Dog employee #1)

[Originally posted here!]

An eye for detail

This happened about 10 years ago. At the time I was working in a small studio on an RTS game shipping exclusively on PC. It was a mid-sized team (about 35 people), with roughly a year and a half of production.

This RTS was level-based: beating a level unlocked the next one, and so on. As with any PC game, it was meant to run on several hardware configurations, so we shipped the game with three sets of textures at different resolutions: low, medium, and high.

So each level came with two additional texture packs, one for the medium-resolution textures and another for the high-res ones (the low-res ones were packaged directly in the main bigfile).

Production went pretty well, and the closing of the game was almost done. Performance was fine, stability was there, most bugs were fixed. Then came the final day before the deadline. We had to burn our final gold master CDs for every SKU in order to send them to the factory first thing the next morning.

So we started by building the ISOs for English, French, and Spanish, burning them, and testing them. Everything was going smoothly. And then came the German SKU! We started building the ISO, and "This image does not fit on the media" popped up on the screen.

What? How is this possible? We had just burned three other SKUs, and it was now 8pm. The CDs needed to be sent at 7am the next day. Looking closer at the problem, it turned out that recorded voices in German last longer and take more disk space than in other languages. All our budgets had been established with other languages in mind. We now had roughly 10 hours to fix the problem, burn that CD, and test it to make sure it actually worked. There was no time to re-compress the audio or make any other clever changes to save some disk space.

Then we got a brilliant idea: choose one of our levels, remove the high-resolution texture pack, and replace it with a copy of the medium texture pack. BAM, 50 MB saved: the ISO fit on the CD. So our German friends with powerful PCs played one of our levels with the same texture detail as players on a medium configuration! I can tell you, this was a very long and stressful night!

- Rémi Quenin

Engine architect, Far Cry

It’s not the size...

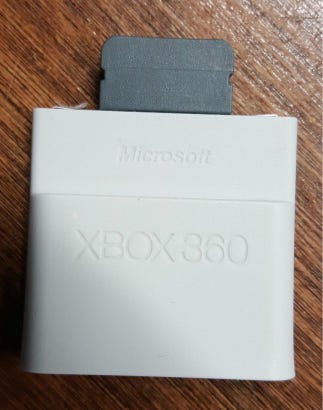

We were working on compliance for a downloadable XBLA title in 2008. Back then, hard drives were optional on the 360, so downloadable titles had to work when launched from memory cards. This particular game was pretty small, clocking in at around 240MB, which meant we had to test the product on the 256MB and 512MB memory cards.

The game launched fine off the 512MB card, but we were getting periodic, inconsistent system lock-ups when attempting to launch off the 256MB card. We racked our brains for a fix, but ultimately decided that our coding efforts would be best spent making the game as good as possible instead of chasing down some ghost in the machine.

So we shoved a 20MB music file into the game data, pushing the total file size beyond 260MB. Because the game no longer fit on a 256MB card, we did not have to involve that card in the submission process at all. It was a good game that we shipped on time. Microsoft and our customers were none the wiser.

- Anonymous

Heaps of pain

In a prominent action game port from PC to PS2, we had lots of fun getting a PC dynamic-allocation-heavy 256MB game to fit in 32MB – even after lots of optimizations and adding level-streaming. It was still oversized, so:

(a) The build machine would load a level from boot, track every allocation, and see which ones survived to level start. Re-running the game would then use this sequence to linearly allocate every permanent allocation, and heap/temp alloc the rest. This saved up to ~15% (~5MB) of memory, sped up allocation considerably, and reduced fragmentation hugely, but there was still too much fragmentation after ~3 levels, so:

(b) The build machine had a second step: reboot, load level (with optimizations from (a)), then dump the entire heap at level start to disk. The shipped game then just loaded these memory images directly over the top of the heap (having temporarily stashed local profile settings on the stack) on each level load.

Result: Very fast level load and zero fragmentation at start of each level, at the cost of longer build times for each release candidate.
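The record-then-replay idea in the first step can be sketched roughly like this. It is a toy model under stated assumptions, not the shipped system: it assumes the boot sequence is fully deterministic so that allocation number N is the same object on every run, and the class names, sizes, and the `boot_to_level_start` sequence are all invented for illustration.

```python
class RecordingAllocator:
    """Build-machine pass: number every allocation and see what survives."""

    def __init__(self):
        self.count = 0
        self.live = {}  # sequence number -> size of still-live allocation

    def alloc(self, size):
        seq = self.count
        self.count += 1
        self.live[seq] = size
        return seq

    def free(self, handle):
        del self.live[handle]


class ReplayAllocator:
    """Shipping pass: known-permanent allocations go into a linear arena."""

    def __init__(self, permanent):
        self.permanent = permanent  # sequence numbers that survived recording
        self.count = 0
        self.arena_top = 0          # bump pointer; never moves backwards
        self.heap = {}              # stand-in for the general-purpose heap

    def alloc(self, size):
        seq = self.count
        self.count += 1
        if seq in self.permanent:
            offset = self.arena_top
            self.arena_top += size
            return ("arena", offset)
        self.heap[seq] = size
        return ("heap", seq)

    def free(self, handle):
        kind, key = handle
        if kind == "heap":
            del self.heap[key]
        # Arena blocks are permanent by construction, so there is nothing to free.


def boot_to_level_start(allocator):
    """Hypothetical, deterministic boot sequence shared by both passes."""
    scratch = allocator.alloc(4096)   # temporary load buffer
    mesh = allocator.alloc(1000)      # lives for the whole level
    decode = allocator.alloc(2048)    # temporary decode buffer
    textures = allocator.alloc(3000)  # lives for the whole level
    allocator.free(scratch)
    allocator.free(decode)
    return mesh, textures
```

Running `boot_to_level_start` once with a `RecordingAllocator` yields the surviving sequence numbers in `recorder.live`; passing `set(recorder.live)` to a `ReplayAllocator` and booting again packs the permanent blocks back to back, with no holes left behind by the temporary ones.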

- Anonymous

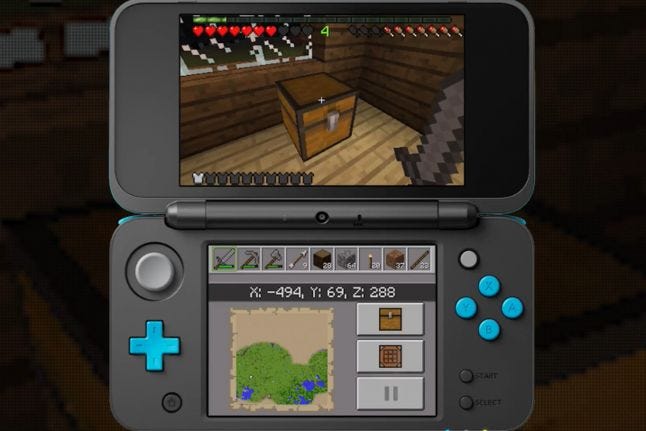

Minecram

Throughout the development of Minecraft for 3DS we were hurting for memory, even on the more powerful New 3DS. So we wanted to play around with some of the texture formats the 3DS supported. The 3DS's internal texture format is weird. It's tile-based, organized in a zig-zag pattern of zig-zag patterns, but then organized linearly at the highest level.
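A "zig-zag of zig-zags" inside each tile reads like what is usually called Z-order or Morton order. The sketch below shows how a pixel coordinate could map to an offset in such a layout. It assumes 8x8 tiles and a texture width that is a multiple of the tile size; treat those as assumptions for illustration rather than a statement of the hardware format, and the function names are ours.

```python
def morton_index(x, y, bits=3):
    """Interleave the low bits of x and y: x0, y0, x1, y1, ..."""
    index = 0
    for b in range(bits):
        index |= ((x >> b) & 1) << (2 * b)
        index |= ((y >> b) & 1) << (2 * b + 1)
    return index


def swizzled_offset(x, y, width, tile=8):
    """Pixel offset in a layout of Morton-ordered tiles stored row by row.

    Assumes `tile` is 8 (matching bits=3) and `width` is a multiple of it.
    """
    tiles_per_row = width // tile
    tile_number = (y // tile) * tiles_per_row + (x // tile)
    return tile_number * tile * tile + morton_index(x % tile, y % tile)
```

Within a tile the first four pixels visited are (0,0), (1,0), (0,1), (1,1), and that small Z shape then repeats at each larger scale. Whole tiles are simply laid out left to right, top to bottom.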

Unfortunately, nobody on the team was familiar enough with the compressed formats to write a conversion utility. Another programmer, Ian, had previously written a texture converter for Mega Man Legacy Collection, but that was more about uncompressed pixel data.

That custom texture converter of his took a .png and spit out a ".3dst" file with a custom format that he invented – it was essentially a minimal header plus raw data we could just blast into memory ready to go ("3dst" stands for "3DS Texture"; pretty clever, eh?).

Nintendo provided their own conversion utility but it only exported images into a "package" file which you had to use Nintendo's library to parse and load at runtime. That was too much overhead for us. Unfortunately again, that file format was undocumented by Nintendo and this appeared to be the only way to get compressed images arranged in the format the 3DS expected.

So I decided to get my hands dirty in a hex editor. I fed Nintendo's utility various images of different sizes and formats and made note of what changed in the header of their files until I had identified enough fields to rustle out the data I needed. I threw together a quick utility to extract the raw data from Nintendo's package files, then put together a batch script to apply this process to the textures we needed. It wasn't fast, and it certainly wasn't elegant, but it worked.
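The "change one input, see which header bytes move" approach can be sketched in a few lines. The 16-byte header built here is entirely made up for the demonstration and says nothing about Nintendo's real package format; the function names are also ours.

```python
import struct

HEADER_LEN = 16  # hypothetical header size for this demo only


def changed_offsets(a, b, header_len=HEADER_LEN):
    """List the header byte offsets where two sample files differ."""
    return [i for i in range(header_len) if a[i] != b[i]]


def extract_payload(blob, header_len=HEADER_LEN):
    """Drop the header and return the raw data that follows it."""
    return blob[header_len:]


def make_sample(width, height, payload):
    """Build a fake file: magic, width, height, format id, payload size."""
    header = struct.pack("<4sHHII", b"PKG0", width, height, 1, len(payload))
    return header + payload
```

Comparing `make_sample(64, 64, bytes(4096))` with `make_sample(128, 64, bytes(8192))` flags one offset inside the width field and one inside the size field, which is the kind of clue that lets you label a field without any documentation.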

- Keith Kaisershot

Programmer, Digital Eclipse

You brew it

When I was working on a 3D racer for the BREW platform, the heap was contained inside the data section of the executable. The executable used an ELF-like format called mod, and it contained a huge chunk of zeros that the application would allocate memory from once it was loaded.

We were regularly running out of heap memory as a result, and rather than manage memory properly (resources were very tight on this platform) I decided to write a tool to insert more zeros and patch up the .exe instead. It worked a treat and I don’t even feel bad about it because it was a terrible platform and a terrible executable format.

- Andrew Haining
