Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

In Part 1, I gave a quick look at what the WorldShape tool can do, but how does it actually work? How might you create your own similar tools? I won’t be able to explain every aspect of Unity, obviously, but I’ll try to point you in the right direction.

The bulk of the code is split between the WorldShape Component and the WorldShapeInspector “custom editor.” Let’s dive right in!

WORLDSHAPE COMPONENT

As I mentioned before, Components are the heart of Unity’s extensions, both at runtime and edit time. A Component is created by deriving from the MonoBehaviour class, which comes with a few benefits right out of the box:

It lets you attach instances of that Component to any GameObject in the Scene.

It automatically lets you see and change public properties of the Component in the Inspector view. In the visual aid above, you see these properties, like “Has Collider” and “Debug Mesh,” which I will talk about later.

Before we can make an editor, we need some data to actually edit. A 2D shape is really just a series of points in space connected by lines, so a WorldShape’s primary purpose is to be a list of points. We can do this a number of ways, but I chose to use Unity’s hierarchy to our advantage. A WorldShape Component looks at its direct children and treats them as vertices. Their order as children and position in space determine where the shape’s vertices exist. Even without the WorldShape editing tool shown above, we can select individual vertices and move them around using Unity’s built-in tools to change the shape.

But wait, where did those child vertices come from? All we did was add a WorldShape Component...

Normally, when you implement functions on a Component like Start or Update, these functions are only called when the game is running, not when editing. Fortunately, there is an attribute called ExecuteInEditMode that can be added to Component class definitions. It forces these functions to be called even when the game is not running.

WorldShape uses ExecuteInEditMode to make sure the Start function gets called when a new WorldShape is created. Start is then used to get the vertices on this shape. If it finds zero vertices, it helpfully creates a starter set of four vertices laid out as a square.
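Here is a minimal sketch of that idea. It is not the actual WorldShape source: the class name, the vertex names, and the size of the starter square are all placeholders I made up for illustration.

```csharp
using UnityEngine;

// Hypothetical, simplified stand-in for the WorldShape component.
[ExecuteInEditMode]
public class WorldShapeSketch : MonoBehaviour
{
    // Because of ExecuteInEditMode, Start also runs at edit time.
    void Start()
    {
        if (transform.childCount > 0)
            return; // The shape already has vertices.

        // Starter square: one child GameObject per vertex (placeholder size).
        Vector3[] corners =
        {
            new Vector3(-1f, -1f, 0f),
            new Vector3(-1f,  1f, 0f),
            new Vector3( 1f,  1f, 0f),
            new Vector3( 1f, -1f, 0f),
        };

        for (int i = 0; i < corners.Length; i++)
        {
            GameObject vertex = new GameObject("Vertex " + i);
            vertex.transform.parent = transform;
            vertex.transform.localPosition = corners[i];
        }
    }

    // Reads the shape back out of the hierarchy, in child order.
    public Vector3[] GetVertices()
    {
        Vector3[] vertices = new Vector3[transform.childCount];
        for (int i = 0; i < vertices.Length; i++)
            vertices[i] = transform.GetChild(i).localPosition;
        return vertices;
    }
}
```

Since the vertices are ordinary child GameObjects, reordering them in the Hierarchy changes the order returned by GetVertices.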

ExecuteInEditMode also causes the Update function to be called every time the editor redraws itself. Update can then respond to values that may have been changed by the user, such as “Has Collider” and “Debug Mesh” being set to true or false. In this case, changing either of those values means we want to add, remove, or update additional data and components on the WorldShape, as seen here:

Most of what’s happening here is a result of Update being called as the screen redraws. A PolygonCollider2D Component is added, updated, and removed as boxes are checked and vertices are changed. If this shape is used to represent a trigger volume later on, being able to automatically keep a collider in sync with the shape is critical.

The Debug Mesh properties determine if and how a mesh will be created and triangulated to match the shape. Each change to the shape or the debug mesh properties can trigger a re-triangulation, or a re-creation of the mesh data, such as its vertex colors or UV mappings for the checkerboard material. This is all useful for a level designer creating geometry without art. Being able to actually see the shapes in game is important (so they tell me). The checkerboard texture helps the designer understand how large the shape is at a glance, which is necessary when designing for jump distances and clearance. The color tints allow for a quick assignment of meaning to an otherwise bland shape: Red means danger, green means healing, purple means puzzle trigger, etc.

We even see the shape being changed when one of its child vertices is selected and moved instead. This is because the Update function is run no matter what is selected, so it gives us a chance to re-evaluate any changes to the shape that may have occurred externally.

One thing to be very careful about when using ExecuteInEditMode is to make sure your editor-only code is truly editor-only. You have two ways of accomplishing this:

If you DO need Update called when the game is running to do something different, you should test against the Application.isPlaying boolean. This boolean is accessible from anywhere and will be FALSE when you are in editor mode.

If you DON’T need Update called when the game is running, you can surround it with an #if UNITY_EDITOR / #endif preprocessor block. This will make sure the code simply doesn’t exist in the published version, so there will be no useless code in your build.
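The two options can be sketched roughly like this. Both class names are hypothetical, and in a real project each MonoBehaviour would live in its own file.

```csharp
using UnityEngine;

// Option 1: Update ships in the build but branches on Application.isPlaying.
[ExecuteInEditMode]
public class PlayAwareSketch : MonoBehaviour
{
    void Update()
    {
        if (Application.isPlaying)
        {
            // Runtime-only behavior goes here.
        }
        else
        {
            // Edit-time-only behavior goes here (e.g. re-syncing a collider).
        }
    }
}

// Option 2: Update is compiled out of published builds entirely.
[ExecuteInEditMode]
public class EditorOnlySketch : MonoBehaviour
{
#if UNITY_EDITOR
    void Update()
    {
        // Only compiled inside the Unity editor. Note that it still runs
        // when you press Play in the editor, so combine it with an
        // Application.isPlaying check if that matters.
    }
#endif
}
```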

Now, not counting the wireframe shape editor, we already have a ton of power inside a single Component. What if we add more Components?

WorldShape is just that -- a shape in the world. It doesn’t do anything on its own. Yes, we can add colliders and meshes, but even the collider doesn’t act the way we want out of the box. So what do we do when we want to change the meaning of a shape? We add more components!

Here’s a WorldShapeSolid component, which makes the shape act like blocking terrain:

Here’s a WorldShapeTrigger component, which registers the shape with the world as a trigger volume that can be passed through:

And finally, here’s a WorldShapeClone that can reference another WorldShape in order to duplicate its vertex set in a new position:

Each of these components relies on an existing WorldShape. WorldShapeSolid and WorldShapeTrigger use a different technique to update after their host shape has changed. Whenever a WorldShape changes, it uses GameObject.SendMessage to dispatch a function call to other components on the same GameObject.

GameObject.SendMessage is normally used at runtime to allow components to communicate with each other without knowing if the message will be received. Interestingly for us, it also works at edit time if ExecuteInEditMode is present on both the sending and receiving components. With GameObject.SendMessage, we can have the WorldShape update itself completely before then letting other components know if something changed, so they can respond.

WorldShapeSolid uses this message to “repair the edges” of a shape by adding and configuring WorldShapeEdge and EdgeCollider2D components.

WorldShapeTrigger uses this message to force “Has Collider” to be true, as well as configuring certain properties of the PolygonCollider2D so it works correctly with our characters.

WorldShapeClone is a bit different. Since it exists on a separate GameObject, it uses the familiar Update function to grab information from its source shape.
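To make the messaging pattern concrete, here is a rough sketch of a sender and a receiver on the same GameObject. The class names, the "OnShapeChanged" message name, and the choice of isTrigger as the collider property are all assumptions for illustration, not the real WorldShape code.

```csharp
using UnityEngine;

// Hypothetical sender: notifies sibling components when a value changes.
[ExecuteInEditMode]
public class ShapeSenderSketch : MonoBehaviour
{
    public bool hasCollider;
    bool lastHasCollider;

    void Update()
    {
        if (hasCollider == lastHasCollider)
            return; // Nothing changed since the last Update.

        lastHasCollider = hasCollider;

        // DontRequireReceiver avoids an error when no sibling component
        // implements a method with this (made-up) name.
        SendMessage("OnShapeChanged", SendMessageOptions.DontRequireReceiver);
    }
}

// Hypothetical receiver: reacts after the shape has finished updating itself.
[ExecuteInEditMode]
public class ShapeTriggerSketch : MonoBehaviour
{
    void OnShapeChanged()
    {
        // Force the collider option back on, as a trigger volume needs one.
        ShapeSenderSketch shape = GetComponent<ShapeSenderSketch>();
        if (shape != null)
            shape.hasCollider = true;

        // Assumed example of "configuring certain properties".
        PolygonCollider2D polygon = GetComponent<PolygonCollider2D>();
        if (polygon != null)
            polygon.isTrigger = true;
    }
}
```

The receiver never has to poll the shape; it only runs when the sender says something changed.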

WORLDSHAPEINSPECTOR

So far, we’ve been able to see some pretty neat features using JUST components. What happens when we go a step further and actually extend the editor?

In Unity, a “custom editor” can mean a few things. In this case, it means a class that extends the Editor base class. This is a bit of a misnomer because an Editor sub-class is normally used to create the block of GUI that shows up in the Inspector for a particular Component, so I tend to call them custom inspectors. A WorldShapeInspector is a custom inspector for WorldShape Components, obviously! Much more information about the basics of creating custom editors can be found in the Unity Manual and around the web.

Most custom inspectors will implement the Editor.OnInspectorGUI function, which lets you augment or replace the default inspector GUI in the Inspector View. I ended up not using this function at all, opting instead to implement the Editor.OnSceneGUI function, which allows you to render into the Scene View whenever a GameObject with a WorldShape component is selected. This is the basis for our wireframe shape editor.

Here is the OFFICIAL documentation for Editor.OnSceneGUI: “Lets the Editor handle an event in the scene view. In the Editor.OnSceneGUI you can do eg. mesh editing, terrain painting or advanced gizmos If call Event.current.Use(), the event will be "eaten" by the editor and not be used by the scene view itself.”

This is, frankly, a useless amount of information if you’re just getting started. What you really need to know is how Events work. The manual has this gem of information: “For each event OnGUI is called in the scripts; so OnGUI is potentially called multiple times per frame. Event.current corresponds to "current" event inside OnGUI call.”

So from this, we discover that Editor.OnSceneGUI gets called many times based on different events, and we can access the event via the static Event.current variable and check its type and other properties.
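A quick way to see this for yourself is a throwaway inspector that logs each event it receives. This sketch assumes a WorldShape MonoBehaviour already exists in your project; the logger class name is made up, and the script would need to sit in an Editor folder.

```csharp
using UnityEditor;
using UnityEngine;

// Assumes a WorldShape MonoBehaviour exists in the project.
[CustomEditor(typeof(WorldShape))]
public class SceneEventLoggerSketch : Editor
{
    void OnSceneGUI()
    {
        Event current = Event.current;

        // Layout and Repaint fire constantly; skip them to keep the Console readable.
        if (current.type == EventType.Layout || current.type == EventType.Repaint)
            return;

        Debug.Log("OnSceneGUI received: " + current.type);
    }
}
```

Select a GameObject with the component, then click and drag in the Scene View while watching the Console.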

Okay, but how do we make that wireframe editor thingy?

Well, Unity has a few built-in tricks called Handles. In short, Handles is a class with a bunch of static functions that process different types of events to create interactive visual elements in the Scene View. Handles are “easy to use” but are far more complicated to actually understand, which you need to do if you hope to master them and create your own. It boils down to knowing how and when events are delivered, and what to do with each one. Understanding the event system is really the first key to understanding powerful editor extension.

I used Handles extensively for editing WorldShapes, as they are the most obvious and direct way to manipulate the shapes. There are five different types of handles used in the WorldShape editor:

Handles.DrawLine is used to draw the actual edges of the shape. This built-in function just draws a line with no interaction.

Handles.Label is used to draw the MxN size label at the shape’s pivot. Similar to Handles.DrawLine, this just draws some text into the Scene View with no interaction.

A custom handle was created for moving parts:

Solid circular handles are shape vertices.

Solid square handles are shape edges.

The semi-filled square handle that starts in the center is the shape’s pivot.
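The two non-interactive calls, Handles.DrawLine and Handles.Label, are simple enough to sketch right away. This is an approximation under a few assumptions: a WorldShape MonoBehaviour exists, its direct children are the vertices, and the size label is built from a plain bounding box. The class name is hypothetical.

```csharp
using UnityEditor;
using UnityEngine;

// Assumes a WorldShape MonoBehaviour exists and its children are the vertices.
[CustomEditor(typeof(WorldShape))]
public class ShapeOutlineSketch : Editor
{
    void OnSceneGUI()
    {
        Transform shape = ((Component)target).transform;
        int count = shape.childCount;
        if (count == 0)
            return;

        // Start the bounds at the first vertex, then grow it as we go.
        Bounds bounds = new Bounds(shape.GetChild(0).position, Vector3.zero);

        for (int i = 0; i < count; i++)
        {
            Vector3 a = shape.GetChild(i).position;
            Vector3 b = shape.GetChild((i + 1) % count).position; // wraps to close the loop

            Handles.DrawLine(a, b);
            bounds.Encapsulate(a);
        }

        // Width x height label at the shape's pivot.
        Handles.Label(shape.position, bounds.size.x + "x" + bounds.size.y);
    }
}
```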

The Handles API comes with a very useful Handles.FreeMoveHandle function that I ended up duplicating for our custom moving handle. I did this for a few reasons, but the two most important were (1) I needed snapping to work differently, and (2) I wanted to know how to do it.

Unfortunately, explaining everything about Handles in detail here would be very dry, but if you’re interested in using Handles, I would HIGHLY recommend grabbing ILSpy to take a peek inside a good portion of the Unity Editor source code. It helps me tremendously every time I want to know how something is done “officially.”

At a high level, making a custom Handle involves understanding Events, Graphics, and “control IDs.”

Events will be “sent” to your function automatically via the static Event.current variable. Each event object has an EventType stored in the Event.type variable. The two most important event types you should familiarize yourself with are:

EventType.Layout -- This is the first event sent during a repaint. You use it to determine sizes and positioning of GUI elements, or creation of control IDs, in order for future events to make sense. For example, how do you know if a mouse is inside a button if you don’t know where the button is?

EventType.Repaint -- This is the last event sent during a repaint. By this point, all input events should have been handled, and you can draw the current state of your GUI based on all of the previous events. Handles.DrawLine only responds to this event, for example. There are many ways to draw to the screen during this event, but the Graphics API gives you the most control.

All other event types happen between these two, most of which are related to mouse or keyboard interactions. You can accomplish a lot with just this information, but in order to deal with multiple interactive objects from a single Editor.OnInspectorGUI call, you need to use “control IDs.”

Control IDs sound scary, but they’re really just numbers. Using them correctly is currently not well documented, so I’ll do my best to explain the process:

Before every event, Unity clears its set of Control IDs.

Each event handling function (Editor.OnInspectorGUI, Editor.OnSceneGUI, etc.) must request a Control ID for each interactible “control” in the GUI that can respond to mouse positions or keyboard focus. This is done using the GUIUtility.GetControlID function. Because this must be done for every event, the order of calls to GUIUtility.GetControlID must be the same during every frame. In other words, if you get a control ID during the Layout event, you MUST get that same ID for every other event until the next Layout event.

During the EventType.Layout event inside Editor.OnSceneGUI, you can use the HandleUtility.AddControl function to tell Unity where each Handle is relative to the current mouse position. This part is where the “magic” of mapping mouse focus, clicks, and drags happens.

During every event, use the Event.GetTypeForControl function to determine the event type for a particular control, instead of globally. For example, a mouse drag on a single control might still look like a mouse move to all other controls.

I promise it’s easier than it sounds. As proof, here’s code that demonstrates how to properly register a handle control within Editor.OnSceneGUI:

```csharp
int controlID = GUIUtility.GetControlID(FocusType.Passive);
Vector3 screenPosition = Handles.matrix.MultiplyPoint(handlePosition);

switch (Event.current.GetTypeForControl(controlID))
{
    case EventType.Layout:
        // Tell Unity how far the mouse is from this handle.
        HandleUtility.AddControl(
            controlID,
            HandleUtility.DistanceToCircle(screenPosition, 1.0f)
        );
        break;
}
```

Not so bad, right? The worst part of that is the HandleUtility.DistanceToCircle call, which takes the screen position of the handle and a radius, and determines the distance from the current mouse position to the (circular) handle. With this, you have a Handle in the Scene View that can recognize mouse gestures, but it doesn’t do anything yet.

To make it do something, we can add code for the appropriate mouse events to the switch statement:

```csharp
case EventType.MouseDown:
    if (HandleUtility.nearestControl == controlID)
    {
        // Respond to a press on this handle. Drag starts automatically.
        GUIUtility.hotControl = controlID;
        Event.current.Use();
    }
    break;

case EventType.MouseUp:
    if (GUIUtility.hotControl == controlID)
    {
        // Respond to a release on this handle. Drag stops automatically.
        GUIUtility.hotControl = 0;
        Event.current.Use();
    }
    break;

case EventType.MouseDrag:
    if (GUIUtility.hotControl == controlID)
    {
        // Do whatever with mouse deltas here
        GUI.changed = true;
        Event.current.Use();
    }
    break;
```

You’ll see a few new things in that snippet:

HandleUtility.nearestControl [undocumented] -- This is set automatically by Unity to the control ID closest to the mouse. It knows this from our previous HandleUtility.AddControl call.

GUIUtility.hotControl -- This is a shared static variable that we can read and write to determine which control ID is “hot,” or in use. When we start and stop a drag operation, we manually set and clear GUIUtility.hotControl for two reasons:

So we can determine if our handle’s control ID is hot during other events.

So every other control can know that it is NOT hot, and should not respond to mouse events.

Event.Use -- This function is called when you are “consuming” an event, which sets its type to “Used.” All other GUI code should then ignore this event.

I mention that “drag starts / stops automatically” in the comments, which means EventType.MouseDrag events will automatically be sent instead of EventType.MouseMove when a button is held down. You are still responsible for checking hot control IDs in order to know what handle is being dragged.

I didn’t put any useful code in there because at this point, the world is your oyster. Need to draw something? Add a case EventType.Repaint: block and draw whatever you like. Want to repaint your handle every time the mouse moves? Add a case EventType.MouseMove: block and call SceneView.RepaintAll [undocumented], which will force the Scene View to… repaint all!
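Putting the pieces together, a complete handle might look something like the sketch below. This is not my production handle: the class and method names are hypothetical, it assumes shapes live in the XY plane and that Handles.matrix is the identity, and it simply moves the handle's center to the mouse instead of preserving the grab offset or snapping.

```csharp
using UnityEditor;
using UnityEngine;

// Hypothetical helper. Assumes XY-plane shapes and an identity Handles.matrix.
public static class MoveHandleSketch
{
    // Call once per handle, in the same order, for every event.
    public static Vector3 Do(Vector3 position, float radius)
    {
        int controlID = GUIUtility.GetControlID(FocusType.Passive);
        Event current = Event.current;

        switch (current.GetTypeForControl(controlID))
        {
            case EventType.Layout:
                // Register how far the mouse is from this handle.
                HandleUtility.AddControl(
                    controlID,
                    HandleUtility.DistanceToCircle(position, radius));
                break;

            case EventType.MouseDown:
                if (HandleUtility.nearestControl == controlID && current.button == 0)
                {
                    GUIUtility.hotControl = controlID;
                    current.Use();
                }
                break;

            case EventType.MouseUp:
                if (GUIUtility.hotControl == controlID)
                {
                    GUIUtility.hotControl = 0;
                    current.Use();
                }
                break;

            case EventType.MouseDrag:
                if (GUIUtility.hotControl == controlID)
                {
                    // Project the mouse onto the plane the handle sits in.
                    Ray ray = HandleUtility.GUIPointToWorldRay(current.mousePosition);
                    Plane plane = new Plane(Vector3.forward, position);
                    float distance;
                    if (plane.Raycast(ray, out distance))
                        position = ray.GetPoint(distance);

                    GUI.changed = true;
                    current.Use();
                }
                break;

            case EventType.MouseMove:
                // Keep hover highlighting responsive.
                SceneView.RepaintAll();
                break;

            case EventType.Repaint:
                // Highlight the handle nearest the mouse, then restore the color.
                Color previous = Handles.color;
                Handles.color = (HandleUtility.nearestControl == controlID)
                    ? Color.yellow
                    : Color.white;
                Handles.DrawWireDisc(position, Vector3.forward, radius);
                Handles.color = previous;
                break;
        }

        return position;
    }
}
```

Inside Editor.OnSceneGUI, you would call it once per vertex and write the result back, along the lines of vertex.position = MoveHandleSketch.Do(vertex.position, 0.25f).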

With the Handles business out of the way, the rest of my Editor.OnSceneGUI function is about 200 lines long. In those 200 lines, I do the following:

Draw lines between every vertex using the Handles.DrawLine function.

Draw handles for every vertex that respond to mouse drags, snapping the resulting position and setting it back into the vertex position.

Draw handles for every edge (each adjacent pair of vertices) that respond to mouse drags, snapping the resulting position and applying the change in position to both vertices.

Respond to keyboard events near any of those handles to split edges or delete edges and vertices.

Draw a handle at the shape’s pivot position, that can be dragged to move the shape or shift-dragged to move the pivot without moving the vertices.

Calculate the shape’s bounding box and display it using the Handles.Label function.

Lastly, I use Unity’s built-in Undo functionality to make sure every one of those operations can actually be rolled back.
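As a rough illustration of the snapping and Undo steps, here is a small helper. The names and the grid-based snapping rule are my own placeholders, and it assumes Unity 4.3 or later, where Undo.RecordObject is available.

```csharp
using UnityEditor;
using UnityEngine;

// Hypothetical helper, not the actual WorldShape editor code.
public static class ShapeEditSketch
{
    // Snaps X and Y to the nearest multiple of gridSize; Z is left alone.
    public static Vector3 Snap(Vector3 point, float gridSize)
    {
        return new Vector3(
            Mathf.Round(point.x / gridSize) * gridSize,
            Mathf.Round(point.y / gridSize) * gridSize,
            point.z);
    }

    // Moves a vertex to a snapped position and records the change for Undo.
    public static void MoveVertex(Transform vertex, Vector3 newPosition, float gridSize)
    {
        Vector3 snapped = Snap(newPosition, gridSize);
        if (snapped == vertex.position)
            return; // Avoid filling the undo stack with no-op entries.

        // Record BEFORE modifying, so Unity can capture the old state.
        Undo.RecordObject(vertex, "Move Shape Vertex");
        vertex.position = snapped;
    }
}
```

Moving an edge would follow the same pattern, recording and updating both of its vertices.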

...and that is really it. There were a few stumbling blocks with Undo and some creating / deleting operations, but everything was smooth sailing once the handles were in place. Normally you wouldn’t have to write your own. Hopefully this has given you some insight into mastering the system, though, so you can make your own tools.

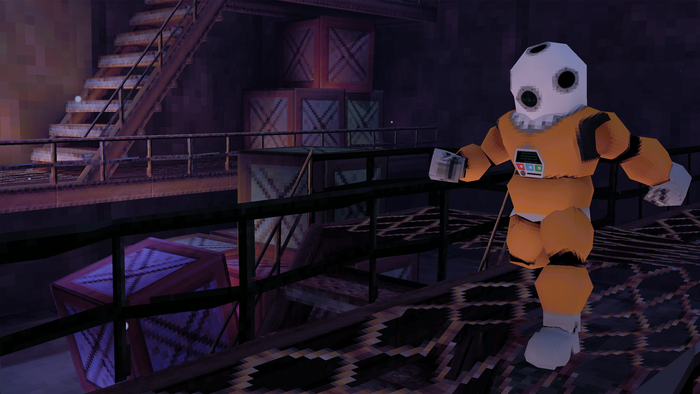

If you’ve made it this far, please enjoy this demonstration of one of our newer tools, the World Editor. This tool lets us fluidly create, change, and move between different “room” scenes that compose our streamed world using many other editor extension tricks. Perhaps a topic for another day?
