Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

A technical overview of how Epic integrated the Oculus Rift hardware into its latest technology: Unreal Engine 4.

With the release of the Oculus Rift hardware, consumer-grade virtual reality experiences are finally becoming viable. Far from the blocky, abstract VR visions of the future from the ’90s, the Rift allows any existing engine to display its 3D content on the headset. This opens the door to amazing possibilities for immersive gaming and storytelling, using all the tricks of the trade we’ve developed as an industry over the past decades.

Background

Having successfully integrated the Rift hardware with Unreal Engine 3, and having seen all the great work being done with it on titles like Hawken, we felt it only made sense to add support to our latest technology, Unreal Engine 4. Being a fan of the Rift technology myself, I decided to begin integrating the Oculus SDK into UE4 during one of our “Epic Fridays,” where developers can spend a day working on any type of interesting project or problem. As it turns out, that was enough time to get an initial implementation up and running in the engine!

Oculus integration is surprisingly simple for how cool the result is. To get the Rift up and running, there are three primary tasks: adding stereoscopic 3D support, compensating for lens distortion and reading from the orientation sensors.

Step 1: Let There Be 3D!

A few years ago, there was a huge push in consumer electronics to deliver “true 3D” experiences. While there are many different technologies out there, such as glasses for 3D TV or the Nintendo 3DS’s parallax-barrier screen, the one thing they have in common is that they are all examples of stereoscopic 3D.

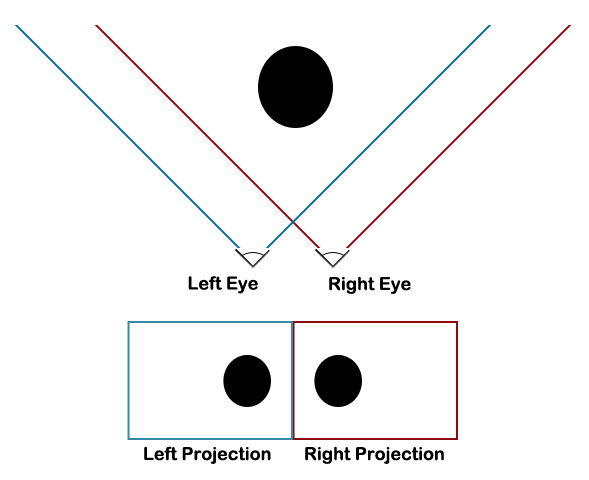

We humans perceive depth in part by processing the subtle differences in images that are interpreted by our left and right eyes. Because they’re separated in space by a distance known as the interpupillary distance (IPD), the image received by each eye is offset differently in each field of view, as seen in the image below:

It’s easy to observe this effect in real life by looking at an object in the distance, so that your eyes are pointed roughly parallel. Hold up a finger in front of your face while still looking at the distant object, and you’ll see a double image of your finger; the two copies move further apart the closer you bring your finger to your face.

The Rift exploits this effect to create a 3D view of the world by having each eye look at one half of the full screen. On the engine side, we do two scene rendering passes, one for each eye, offsetting the in-game camera to the left and right of the view center by an amount that corresponds to the in-game equivalent of the offset between the lenses in the Rift hardware itself. The math behind this isn’t terribly complicated, but it is a little beyond the scope of this post. Thankfully, the team at Oculus has a very good overview on their developer site of how to calculate the proper rendering offsets and create appropriate projection matrices for rendering to the device.
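For reference, the core relations can be summarized as follows. This is our reading of the approach in Oculus’s developer documentation of that era, so check the details against the SDK version you use. Each eye’s camera is shifted sideways from the view center by half the eye separation $d$ (the Oculus documentation used the user’s IPD here), converted to world units by a scale $s$. Each eye’s projection $P$ is then shifted horizontally in clip space by $h$, so that its center lines up with the lens rather than with the middle of that eye’s half of the panel. Here $S_h$ and $S_v$ are the panel’s physical width and height, $d_{\text{lens}}$ is the distance between the lens centers, $d_{\text{eye}}$ is the eye-to-screen distance, $R_h \times R_v$ is the panel resolution, and $T(x)$ is a clip-space translation along the horizontal axis:

$$
\Delta x_{\text{left}} = -\tfrac{1}{2}\, d\, s, \qquad \Delta x_{\text{right}} = +\tfrac{1}{2}\, d\, s
$$

$$
h = \frac{4}{S_h}\left(\frac{S_h}{4} - \frac{d_{\text{lens}}}{2}\right), \qquad P_{\text{left}} = T(+h)\,P, \qquad P_{\text{right}} = T(-h)\,P
$$

$$
\theta_y = 2\arctan\!\left(\frac{S_v / 2}{d_{\text{eye}}}\right), \qquad a = \frac{R_h / 2}{R_v}
$$

The term $S_h/4 - d_{\text{lens}}/2$ is how far the lens center sits from the middle of its half of the panel, in meters; multiplying by $4/S_h$ expresses that distance in the $[-1, 1]$ range spanning one eye’s half. $\theta_y$ and $a$ are the vertical field of view and aspect ratio used to build $P$.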

For UE4, we created a generic stereoscopic rendering interface, which enables us to define a few functions to calculate the stereoscopic view offset and the projection matrices. The Oculus SDK helps you to obtain all the measurements needed to calculate these, so in our Rift-specific stereoscopic view implementation, we simply read the configuration from the device, and return the calculated values.

For debugging purposes, we also made a “dummy device” renderer, which used the same values as the Rift but didn’t require a device to be connected. This made bugs in the multi-pass renderer far easier to track down, because we could debug them without the hardware. With this step all said and done, we end up with something like this:

If you cross your eyes so that the two images converge to one image, you can see the depth in the above in-game screenshot.
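The arrangement described in this step (a generic stereo interface, a device-backed implementation, and a device-free stand-in for debugging) can be sketched as below. This is a minimal Python sketch under stated assumptions, not UE4’s actual interface: every class and method name is hypothetical, `device.get_config()` stands in for whatever query the SDK provides, and the dummy values are illustrative numbers in the range of the first development kit.

```python
import math
from abc import ABC, abstractmethod

LEFT, RIGHT = -1, +1  # each eye's sign for its horizontal offset from the view center


class StereoRendering(ABC):
    """Hypothetical engine-side stereo interface (not UE4's actual API)."""

    @abstractmethod
    def hmd_params(self) -> dict:
        """Physical HMD measurements, in meters."""

    def view_offset(self, eye: int, world_units_per_meter: float = 100.0) -> float:
        """Sideways camera offset for one eye, in world units (100 assumes 1 unit = 1 cm)."""
        return eye * 0.5 * self.hmd_params()["ipd"] * world_units_per_meter

    def projection_center_shift(self, eye: int) -> float:
        """Clip-space x shift that moves each eye's projection center onto its lens."""
        p = self.hmd_params()
        h = 4.0 * (p["h_screen"] / 4.0 - p["lens_separation"] / 2.0) / p["h_screen"]
        return -eye * h  # left eye shifts right (+h), right eye shifts left (-h)

    def vertical_fov(self) -> float:
        """Vertical field of view in radians."""
        p = self.hmd_params()
        return 2.0 * math.atan(0.5 * p["v_screen"] / p["eye_to_screen"])


class RiftStereo(StereoRendering):
    """Reads the measurements from the headset; `device` is a hypothetical handle."""

    def __init__(self, device):
        self._device = device

    def hmd_params(self) -> dict:
        return self._device.get_config()  # hypothetical call standing in for the SDK query


class DummyStereo(StereoRendering):
    """Same kind of numbers as the headset, no hardware required: handy for debugging."""

    PARAMS = {
        "h_screen": 0.14976, "v_screen": 0.0936, "eye_to_screen": 0.041,
        "lens_separation": 0.0635, "ipd": 0.064,
    }

    def hmd_params(self) -> dict:
        return dict(self.PARAMS)
```

In this sketch, the rest of the renderer only ever talks to `StereoRendering`, so switching between `DummyStereo` and `RiftStereo` does not touch the two-pass rendering code.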

Step 2: Distortion

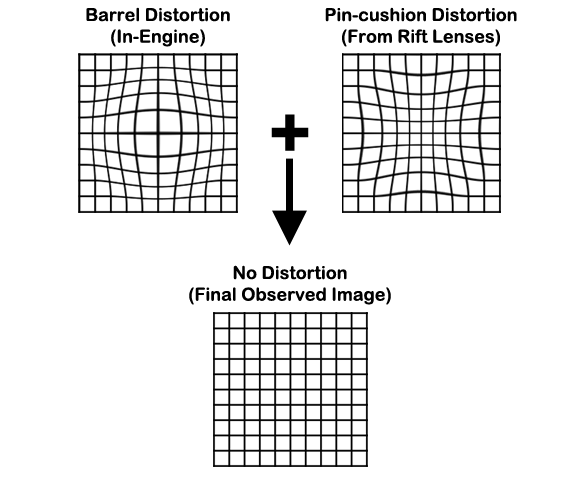

After tuning our stereoscopic rendering, we could actually put on a Rift headset and see a 3D image, which is a pretty good first step. However, the image wasn’t perfect: you’ll notice that the further from the center of the screen you look, the more distortion there is in the image. This is due to the lenses the Rift uses to magnify the screen, which create what is referred to as a pincushion distortion. This distortion stretches the image outward, increasingly so toward the edges of the screen, as seen in the image below. Fortunately, this is simple to counteract by rendering the images with an opposing distortion, called a barrel distortion, which pulls pixels in toward the center of the screen. The result is that the final image viewed on the device has no apparent distortion.

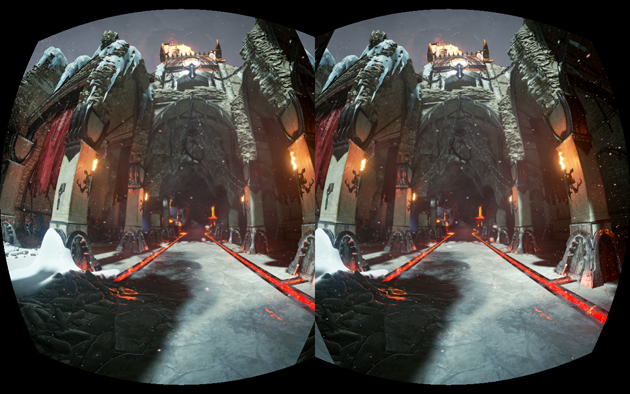
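The correction can be summarized with the radial polynomial used in Oculus’s early SDK documentation (sketched here as we understand it; the exact normalization varies between SDK versions). With $r$ the distance of a pixel from the lens center in normalized coordinates and $k_0 \dots k_3$ the distortion coefficients read from the headset:

$$
f(r) = r\left(k_0 + k_1 r^2 + k_2 r^4 + k_3 r^6\right)
$$

If the lens shows a screen pixel at radius $r$ at an apparent radius $L(r)$, and the post-process shader fills that pixel with source content taken from radius $f(r)$, then source content at radius $f(r)$ appears at apparent radius $L(r)$. The image looks undistorted when $L(r) = c\, f(r)$ for some constant $c$, so the polynomial effectively models the lens’s pincushion profile. With positive $k_1 \dots k_3$, $f(r)/r$ grows with $r$, so the picture on the panel is squeezed toward the center, more strongly near the edges: the barrel shape.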

With the distortions applied, the in-game stereoscopic image above now becomes something like this when not viewed through the Rift:

When viewed on the Rift, the above image produces an undistorted image that covers our field of view and looks correct. As with stereoscopic projection, the math behind this is out of the scope of this post, but suffice it to say that a simple few-line pixel shader in your post-process setup is all that is required. You simply read the distortion coefficients from the Rift’s hardware configuration, plug the values into a small equation, and you’re set!
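What that pixel shader does for each pixel can be sketched on the CPU in Python. This assumes the radial polynomial model from Oculus’s early SDK documentation, coordinates centered on the lens in which one eye’s rendered image spans [-1, 1] on each axis, and illustrative coefficients. It ignores the lens-center offset and aspect-ratio correction a real shader would also handle, and `barrel_warp` is a hypothetical name.

```python
from typing import Optional, Sequence, Tuple

# Illustrative coefficients (k0..k3); real values come from the headset's configuration.
K = (1.0, 0.22, 0.24, 0.0)


def barrel_warp(x: float, y: float, k: Sequence[float] = K,
                scale: float = 1.0) -> Optional[Tuple[float, float]]:
    """For an output pixel at (x, y), in lens-centered coordinates where the eye's
    rendered image spans [-1, 1], return the point of that image to sample, or
    None when it falls outside the image (the shader writes black there)."""
    r_sq = x * x + y * y
    factor = k[0] + k[1] * r_sq + k[2] * r_sq ** 2 + k[3] * r_sq ** 3
    # Sampling further out than the pixel itself squeezes the picture toward the
    # center (a barrel distortion); `scale` accounts for an enlarged render target.
    sx, sy = x * factor / scale, y * factor / scale
    if abs(sx) > 1.0 or abs(sy) > 1.0:
        return None
    return sx, sy
```

With these coefficients, a pixel halfway to the edge samples from 0.535, while a pixel at the very edge would sample from 1.46, beyond the rendered image, which is where a black border comes from.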

One side effect of the barrel shader is that the image itself is shrunk during the distortion. If you run the distortion on a render target that is the size of the output buffer, you’ll notice that the visible image is considerably smaller. You can see the effect somewhat in the above image, where the black pixels surrounding the image represent where the shader was sampling from invalid pixel data outside the bounds of the original render target. To compensate for this shrinking, we actually render to a target that is larger than the final output target. That way, we fill the majority of the final render target with valid pixels, and the quality of the image is maintained.
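How much larger the render target needs to be can be estimated from the same kind of radial polynomial: pick a point on the output that must still receive valid pixels and compute how far out the warp samples for it. The sketch below is a minimal Python illustration under assumptions: it fits the outer edge of the left eye’s viewport (one common choice, not necessarily UE4’s), uses coordinates spanning [-1, 1] across one eye, and the function names and the lens-center offset of 0.152 are illustrative.

```python
import math
from typing import Sequence, Tuple


def distortion_scale(k: Sequence[float], lens_center_offset: float,
                     fit_x: float = -1.0, fit_y: float = 0.0) -> float:
    """Factor by which to enlarge the render target so the barrel warp still finds
    valid pixels at the fit point. Coordinates span [-1, 1] across one eye's
    viewport; lens_center_offset is how far the lens center sits from the middle
    of that viewport (toward the nose, +x for the left eye)."""
    r = math.hypot(fit_x - lens_center_offset, fit_y)
    r_sq = r * r
    # f(r) / r for the radial polynomial f(r) = r * (k0 + k1 r^2 + k2 r^4 + k3 r^6)
    return k[0] + k[1] * r_sq + k[2] * r_sq ** 2 + k[3] * r_sq ** 3


def render_target_size(width: int, height: int, scale: float) -> Tuple[int, int]:
    """Round the enlarged eye viewport up to whole pixels."""
    return math.ceil(width * scale), math.ceil(height * scale)
```

With coefficients (1.0, 0.22, 0.24, 0.0) and a lens-center offset of 0.152, fitting the outer edge gives a scale of roughly 1.71, so a 640×800 eye viewport would be rendered at about 1098×1372. Fitting a point closer to the center trades some coverage at the edges for fewer rendered pixels.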

With stereoscopic rendering and distortion in place, the last piece of the puzzle is to hook up head orientation tracking, so that the in-game view is oriented the same way as our real-world head.

Step 3: Adding in Motion

With rendering out of the way, we need to read the orientation of the player’s head so that we can use that data to control the in-game camera. Because the Rift hardware does most of this for you, this last step is far easier than the previous two.

The Rift determines the orientation of the head through a combination of multiple sensors on the device. The primary sensor is a gyroscope, which tracks angular velocity around each axis. The other two sensors, a magnetometer and an accelerometer, correct the small errors that accumulate over time from the gyroscope’s readings, known as drift error.
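The SDK performs this fusion for you, but the basic idea of drift correction can be shown with a generic complementary filter on a single axis. This is a textbook technique chosen for illustration, not the Rift’s actual fusion algorithm, and the blend factor, sample rate and bias below are assumptions. The accelerometer’s reading of gravity anchors pitch (and roll); a magnetometer can play the same role for yaw.

```python
from typing import Iterable, Tuple


def fuse_pitch(samples: Iterable[Tuple[float, float]], dt: float,
               alpha: float = 0.98) -> float:
    """Generic one-axis complementary filter (not the Rift's actual algorithm).

    Each sample is (gyro_rate, accel_pitch): the gyro's angular velocity in rad/s
    and the pitch implied by the accelerometer's gravity reading, in radians.
    Integrating the gyro alone accumulates drift from any bias; blending in a
    small fraction of the accelerometer estimate each step pulls it back.
    """
    pitch = 0.0
    for gyro_rate, accel_pitch in samples:
        pitch = alpha * (pitch + gyro_rate * dt) + (1.0 - alpha) * accel_pitch
    return pitch
```

With the head held level and a gyro bias of 0.05 rad/s, pure integration of 100 seconds of 100 Hz samples drifts by 5 radians, while the filtered estimate settles at a small constant error of about 0.0245 radians.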

Fortunately, as far as the engine is concerned, the majority of this work happens under the hood. All we need to do is sample the final sensor orientation data when controller input is normally processed, and then update the game camera to match. This provides a good first pass: you can put on the hardware and look around freely as if you were actually standing there in your game. It looks pretty cool, but we can do better.
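A minimal sketch of that first pass, assuming orientations are unit quaternions stored as (w, x, y, z) tuples and a Z-up world (as in UE4). The function names are hypothetical, not UE4 or Oculus SDK APIs, and letting controller input contribute only yaw is a simplification for the example.

```python
import math

Quat = tuple  # (w, x, y, z)


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (rotating a vector by it applies b, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def yaw_quat(radians: float) -> Quat:
    """Rotation about the up axis, assumed to be +Z."""
    return (math.cos(radians / 2), 0.0, 0.0, math.sin(radians / 2))


def camera_rotation(body_yaw: float, hmd_orientation: Quat) -> Quat:
    """Controller or mouse input turns the player's body; the headset orientation,
    sampled while input is processed, is layered on top of it."""
    return quat_mul(yaw_quat(body_yaw), hmd_orientation)
```

Turning the body 90 degrees with the controller while also turning the head 90 degrees yields a camera facing 180 degrees from the start, as expected.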

With the above setup, when you move your head, you’ll notice something feels amiss. It’s very subtle, and nearly imperceptible, but something just feels not quite right. Some people, like me when I first tried it, don’t even notice anything is wrong at first. However, after playing around for a little while, the motion begins to feel “drifty” or “swimmy.” The culprit here is latency.

Latency is the enemy of immersion. At the most basic level, it is the amount of time that passes from when the sensor orientation is read to when the image is displayed on the device. It may seem trivial, but it results in feeling disconnected from the world you’re viewing.

Just as the Rift hardware is reading orientation in space, so is your body. If you remember your middle school biology class, your body senses its orientation largely via the vestibular system, and combines that with visual input. That’s why you can still tell your orientation in space even with your eyes closed. But, as anyone who has ever been dizzy and felt sick can tell you, when your visual and vestibular systems get out of sync, you don’t feel right; in the worst case, the result is motion sickness. Thus, it is paramount that the visuals of the game match the real-world orientation as closely as possible to avoid this pitfall. Your brain is used to essentially zero latency between moving your head and seeing the result, and we need to come as close to that as possible.

To minimize this perceived latency, we read the sensor orientation not once per frame, but twice. The first read happens where we process input from the normal sources, such as the controller and mouse. That orientation drives gameplay that depends on where the player is looking, such as weapon traces in the world. The second read happens on the render thread, right as we calculate the final view matrix to use for rendering. We adjust the view matrix for any orientation change since the first read, and send the final orientation off to be used for rendering the world.

This enables the final projected image to match the actual orientation of the device as closely as possible. It may not seem like much, but the few milliseconds of latency savings make a huge perceptual difference.
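The render-thread read amounts to correcting the view by the head motion that happened since the first read. Below is a minimal Python sketch, assuming unit quaternions as (w, x, y, z) tuples and a view rotation built as body rotation times head orientation; the function names are hypothetical, and an engine would do this with its own math types on the render thread.

```python
import math


def quat_mul(a, b):
    """Hamilton product a * b of (w, x, y, z) tuples."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def axis_angle(axis, angle):
    """Unit quaternion rotating `angle` radians about a unit-length `axis`."""
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)


def late_update(view_rotation, q_game, q_render):
    """Correct a view rotation built as body * q_game (the game-thread head sample)
    using a fresher sample q_render read on the render thread. Gameplay work such
    as weapon traces keeps using q_game; only the rendered view picks up the head
    motion that happened in between."""
    delta = quat_mul(quat_conj(q_game), q_render)  # head motion since the first read
    return quat_mul(view_rotation, delta)
```

The result equals body × q_render, the view the game would have computed had it read the sensor at render time, without re-running any gameplay code.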

Final Polish

With the rendering system hooked up, and the motion controls as low-latency as we can make them, we can produce a pretty convincing VR interaction. However, it’s only the start of creating a truly great experience. The engine is only the base; we still need to make sure that the game itself is conducive to creating a fun experience. At a higher game level, there are a few techniques we use that really help a game adapt well to the Rift:

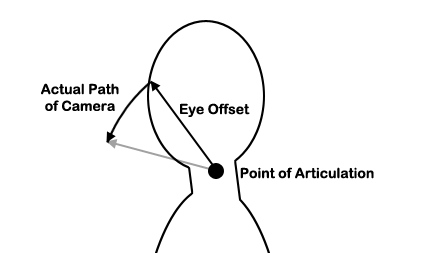

Adjusting for Eye Offsetting: Your eyes aren’t in the center of your head, rotating about a single point. When you move your head around, your eyes are actually pivoting around your neck, so when you look down, they travel in an arc. Your brain is used to this offset, so it is useful to reproduce it by adding a simple vector offset to the view location, representing the distance from the pivot in your neck to your eyes. That way, the response in the game world feels more natural when we rotate based on the Rift orientation.

Disabling Motion Blur: While motion blur looks great on a screen from a distance, the effect breaks down when your eyes are close to the screen. Rather than looking natural, it muddies the image. We disable the effect by default when the Rift hardware is detected.

Disabling Depth-of-Field: The Rift has a great field of view on the device, so you can use your eyes to look around the environment while playing the game. As a result, your eyes tend to look at far more areas of the screen than in a standard living room setup, where you are generally focused on the center of a screen at a distance. Depth-of-field effects generally assume the player is looking straight at the center of the screen, and the blurring happens towards the periphery, away from the player’s focus. In a VR experience, this is often not the case! Standard depth-of-field effects often make much of the world look blurry for no good reason. Until we get pupil tracking in the hardware so we can tell where the player is looking, it is best to keep depth-of-field effects off.

Picking the Right Art Style: This is one of the more difficult nuances to master, but also one of the most important. Because of the limited resolution of the screen and the proximity of your eyes, some art styles just don’t work well. We have found that cel-shaded games with high-contrast edges don’t hold up as well as higher-frequency visuals, where the aliasing artifacts are hidden by the noise of the textures and shapes. Of course, this will change as the hardware evolves, but it is something to keep in mind when designing your experience.
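The eye-offset technique from the start of this list can be sketched as a small “neck model”: the eye position is the neck pivot plus a fixed neck-to-eye offset rotated by the head orientation. This is a minimal Python sketch, assuming unit quaternions as (w, x, y, z) tuples, a Z-up world with X forward, and a hypothetical offset of 10 cm forward and 15 cm up; those numbers are placeholders to tune, not values from UE4.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, u = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(u, v))
    c2 = cross(u, t)
    return tuple(v[i] + w * t[i] + c2[i] for i in range(3))


# Hypothetical neck-to-eye offset in cm (X forward, Z up); tune per game.
NECK_TO_EYE = (10.0, 0.0, 15.0)


def eye_position(neck_pivot, head_orientation, neck_to_eye=NECK_TO_EYE):
    """Camera location: the neck pivot plus the neck-to-eye offset rotated with the head."""
    return tuple(p + o for p, o in zip(neck_pivot, rotate(head_orientation, neck_to_eye)))
```

Because the offset rotates with the head, pitching or turning the head swings the camera along an arc around the neck pivot instead of spinning it in place.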

What’s Next

Now that we have the hardware and engine ready, the final step is to create a compelling VR experience. This is an exciting time! We haven’t yet established all the paradigms and best practices, so it is a largely unexplored frontier. Someone needs to make the first Mario, the first Doom or the first Ultima Online to establish the archetypes that will inform the design language of this new medium. The first step is integrating VR into existing games, but the most compelling experiences are yet to come, and will be built from the ground up to be immersive. So, get out there and push some boundaries!

Special Thanks

I’d like to give special acknowledgement to the late Andrew Reisse from Oculus for his vital contributions to the Unreal Engine 4 integration with the Rift, and for teaching me an incredible amount about VR. The world has lost a truly gifted mind.
