Google's DeepMind artificial intelligence (AI) division has established a new research group to learn more about the ethical questions posed by the dawn of AI.

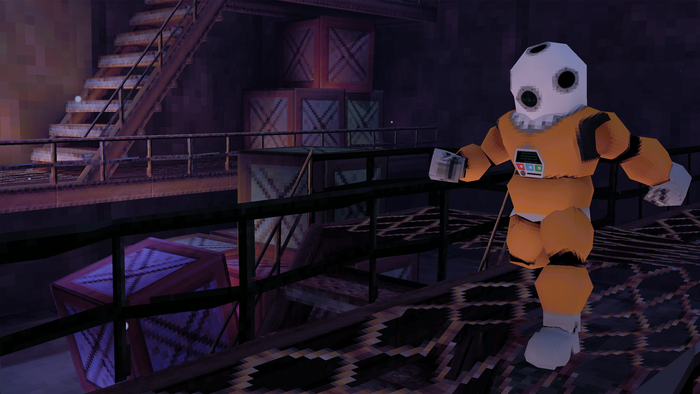

The British artificial intelligence outfit was acquired by Google in 2014, and often uses video games as part of its projects.

For instance, back in 2016 the company partnered with Blizzard to create an API tailored for research environments based in StarCraft II, and prior to that the DeepMind team developed an artificial agent capable of learning how to play Atari 2600 games from scratch.

Now, the DeepMind Ethics & Society unit hopes to unravel some of the biggest ethical quandaries posed by the creation of artificial intelligence to pave the way for "truly beneficial and responsible AI."

"We believe AI can be of extraordinary benefit to the world, but only if held to the highest ethical standards. Technology is not value neutral, and technologists must take responsibility for the ethical and social impact of their work," reads a blog post on the DeepMind website.

"The development of AI creates important and complex questions. Its impact on society -- and on all our lives -- is not something that should be left to chance. Beneficial outcomes and protections against harms must be actively fought for and built-in from the beginning. But in a field as complex as AI, this is easier said than done.

"As scientists developing AI technologies, we have a responsibility to conduct and support open research and investigation into the wider implications of our work. At DeepMind, we start from the premise that all AI applications should remain under meaningful human control, and be used for socially beneficial purposes."

DeepMind isn't the only institution looking into this area. Other research projects, such as Julia Angwin's study of racism in criminal justice algorithms, and Kate Crawford and Ryan Calo's examination of the broader consequences of AI for social systems, have also begun to peel back the curtain.

For DeepMind, the hope is that its new unit will achieve two primary aims: to help technologists put ethics into practice when the time comes, and to ensure society is sufficiently prepared for the day AI becomes part of the wider world.
