
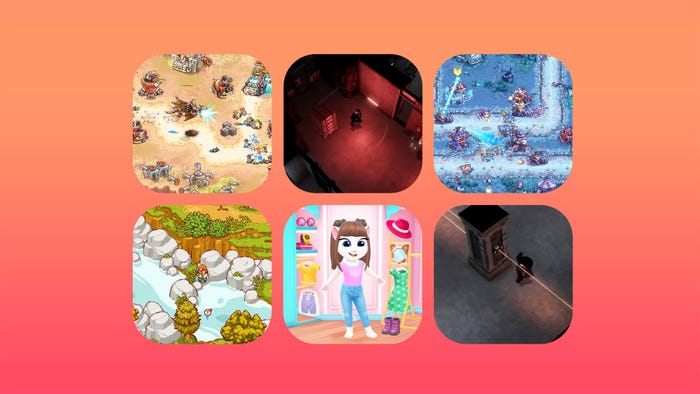

Screenshot of various games available on Apple Arcade.


Apple reaffirms faith in Arcade, says Vision Pro included in platform plans

Following earlier concerns about Apple Arcade's future, senior director Alex Rofman says the platform is going nowhere but up.
