How long until devs can create VR games with robust VR authoring tools? Gamasutra spoke to people working on the Unreal Engine VR Editor and Unity EditorVR to find out.

Virtual reality games are enjoying a boom at the moment. Many developers, both AAA and indie, are exploring the possibilities of this exciting medium. But by and large, their immersive 3D worlds are being built with the same 2D tools that were used to create video games for flat monitors, TVs, and touchscreens.

Will devs one day create 3D worlds within a 3D workspace? Some game companies are currently trying to make that a reality. At the forefront are Unity and Epic, who both offer VR authoring tools that work with their existing game engines. The Unreal Engine VR Editor and Unity EditorVR are both currently available for users of those engines.

Why use VR design tools?

Unity Labs principal designer Timoni West believes using virtual reality to create video games is the natural next step, and that it will be more intuitive and accurate than working on a flat screen. It's very difficult to describe 3D objects in 2D, she said, which is why developers rely on software like Blender or Maya. With VR, devs can see 3D objects in their natural environment and manipulate them directly.

“Let’s say I want to draw a Christmas scene in VR,” West explained. “Right now, I have to set up the scene in Unity in 2D, use the grids to align things, constantly move the screen back and forth using the hotkeys to pan, or other keys, to place things as precisely as the mouse and keyboard [allow]. And people get really good at this. They get really fast at it, but it takes years and years of practice and knowing how to translate what you’re trying to do in two dimensions across three dimensions. But if you just put on the headset and go to VR, you can decorate your Christmas tree just like you would in real life, and that’s a big advantage.”

Lauren Ridge is a technical writer for Epic Games who works on the Unreal Engine’s VR Editor. Over the past year, she’s collaborated with the team to add new features and improve the user experience. Like West, she believes that seeing and manipulating objects in 3D can give game developers an edge when crafting virtual reality experiences.

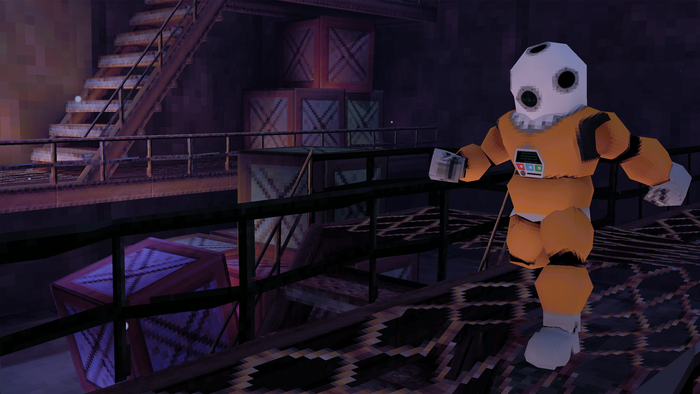

Epic's Lauren Ridge plays with the Unreal Engine VR Editor

“If you’re making a VR experience or a VR game, sometimes it’s hard to really feel if [objects] are right until you’re actually in the position of the VR player,” said Ridge. “So, maybe something is too close to you, maybe something’s not quite the right height for your reach, and being actually immersed in that environment lets you tell that without having to iterate a lot between the desktop editor and playing the game in VR.”

Another potential benefit of VR editors is that they can make a developer’s work a little more fun. Although Ridge says her team isn’t trying to “gamify” game-making, she believes people will enjoy seeing their game worlds build up around them. “It really shifts your perspective from just looking into a window at it,” she said. “We have inertia on objects, so if you’re moving, say, a ball around the world, you can throw it with a gizmo and it actually has a little bit of physics to it. And things like that really give it more of an immersive, engaging feeling while you’re designing that way.”

What does game design with VR tools look like?

During the keynote address at Unite 2016 in Los Angeles, West took the stage to show off how EditorVR works. (The demo begins at the 1:48:00 mark of the keynote video.) Using Campo Santo’s first-person adventure Firewatch as an example, she used a Vive headset and controllers to easily and fluidly place objects, such as a coffee mug and a typewriter, into the scene and change their positions and rotations.


Fiddling around with Firewatch's level design in Unity's EditorVR

“We’re just trying to put as much of the functionality that already exists in Unity directly into VR so you can just do it all in there, and you don’t have to get out of your workflow or take off your headset,” said West.

Meanwhile, Epic co-founder Tim Sweeney himself has demoed his company's VR editor in a video.

It's still early days for VR design tools. Virtual reality itself is a new field, after all, and Epic Games and Unity acknowledge that they don’t have all the answers. The source code for both the Unreal Engine VR Editor and Unity EditorVR is currently available on GitHub.

“We see people come up with cool solutions all the time, and we’re a relatively small team, and our goal is to further people making amazing 3D content,” said West. “So there’s no reason for us to keep that closed source and lock people into a tool that may not suit their needs. We really want people to come up with better solutions than we have, because we think that they will be focused on areas that we can’t focus on or we’ll never get to, and then we can take what they learned and hopefully bring that back into the EditorVR core itself.”

How to be prepared for the VR future of game design

Creating video games in virtual reality does have its drawbacks. It requires a fast, cutting-edge computer; West recommends a GTX 1080 graphics card, one of the best GPUs currently available. Ridge points out, however, that if you’re creating a VR game, you likely have that kind of hardware already.

The physical movements required to manipulate objects in a virtual space are another potential obstacle. They’re not as efficient or precise as using a mouse and keyboard: it’s difficult to type with motion controllers, and your gestures have to be broader.

“For that reason, we don’t anticipate anyone’s going to be coding in VR for quite some time,” said West. “We need tracked keyboards. We need 3D models that are fully rigged, and also the resolution of even the best VR headsets is not that great right now, and it’s actually very hard to read text in VR to this day, and will continue to be for quite some time.”


The NumberPad feature in the Unreal Engine's VR Editor

West and Ridge both offer some advice for those who want to get more experience working with VR authoring tools.

“A lot of it’s just looking at the level design tutorials, which we have a lot of on YouTube, but also on the documentation site,” said Ridge. “Reading those tutorials, watching videos, tuning into the live streams, and just learning about game development in general will map really well, too.”

“Try everything you can on every platform,” West said. “Bug your friends with rigs. Cold-call people and ask if they can show you things. Pay attention to onboarding, button mappings, options, locomotion, sound design, selection types. Read up on ergonomics. Start thinking about why things are set up the way they are in the physical world. Are there natural constraints, like gravity, or are things genuinely easier to use than others, like keyboards vs. finger painting? Try to replicate the genuinely good, not just the physical standards.”
