Normally, the Uncanny Valley theory is used to critique graphical realism in games, but it also applies to AI. In this Gamasutra feature, game designer David Hayward examines the fascinating fidelity issues involved, citing games from Valve's Half-Life 2 to the interactive drama Façade.

I ring the bell and Trip answers the door.

"How's it going, asshole?" I ask, and instantly his face falls. My mouth opens and I feel a quick spike of guilt before telling myself he's not real. In silence, with a heartbreakingly sad look, Trip slowly shuts the door in my face. Well, that was new. I reload.

I expected the AI to break, not to look like a kicked puppy at exactly the right moment. On each run through Façade it pushes back at me a little, understanding a small part of what I'm doing and saying. It does feel broken, but within a small and repetitive setting it keeps creating tiny, novel bits of emotional engagement. Each of those eventually wears off with experience: my unconscious gradually catches up with my conscious knowledge, learning that Trip and Grace are things, not people.

The ability of things to fool us is often a question of resolution. Something that can fool you at a glance will not stand against a close look or a prolonged gaze. Spend long enough watching a magician doing the same trick, or see it from the right vantage, and eventually you'll unravel it.

This goes for CGI imagery of people, such as photo-realistic vector art or 3D models. What looks incredibly realistic at a distance may not under closer scrutiny. When we’re accustomed to or expectant of it, a lack of detail can be stylistic to the point of being painterly, but when unexpected it can pitch a nearly photographic representation headlong into the Uncanny Valley.

The valley has been widely discussed in relation to the appearance of CGI humans over the past few years. While thinking about it recently, I found the very worst moments of my social life flashing in front of me, interlocked with thoughts of some of the best game AI. I now think that the Uncanny Valley applies to behavior too.

There's a small minority of people who are consistently strange in particular ways. You've probably met a few of them. Human though they are, interaction with them doesn't follow the usual dance of eye contact, facial expressions, intonations, gestures, conversational beats, and so forth. For most, it can be disconcerting to interact with such people. Often, it's not their fault, but even so the most extreme of them can seem spooky, and are sometimes half jokingly referred to as monstrous or robotic.

I don't mean to pick on them as a group; nearly all of us dip into such behavior sometimes, perhaps when we're upset, out of sorts, or drunk. Relative and variable as our social skills are, AI is nowhere near that level of interactive sophistication. It is, however, robotic, as well as monstrous and sometimes unintentionally comedic. The intersection of broken AI and spooky people is coming.

The problem is compounded by the fact that there's no way to abstract behavior or make it "cute". Cuteness is visual, so by rendering it as a cartoon even the repellent appearance of an ichor-dripping elder god can be offset. In a similar way, by its visual characteristics a Tickle Me Elmo doll pushes a lot of our "cute" buttons. However, when it's set on fire and continues to giggle, kick its feet and shout "Stop! Stop! It tickles!" while it burns into a puddle of fuming goo, it seems horrific, profane and hilarious by turns.

That’s programmed behavior pushed out of context, and the highly specialized fragments of AI currently integrated into video games easily break in the same way when they stray from their intended stages.

Strange or sick behavior can't be abstracted into a cuter, more appealing version of itself unless it's made burlesque, naive, or consequence free, and of course this would have drastic narrative effects. While a story can be told through any number of sensory aesthetics, behavior itself works through time, its meaning often independent of representation. That's extremely important for interactive media.

There are lots of things across all media that can already fool us. The crucial question, though, is how well they do it. Distance and brevity obscure all manner of flaws, but at some point in a game, the player can always get closer or look for longer.

This applies to absolutely every aspect of simulation, but the aspects centered on other humans are critical. We're a very social species, and as a result large amounts of our cognitive resources are thrown into the assessment of other human beings. For instance, we show extraordinary specialization in recognizing, processing and categorizing the faces of other humans. We're acutely aware of whether or not other people are looking at us. We spend every second of interaction inferring the emotional state, values, and likely actions of others.

Of all the sensory data we deal with, other people are among the most relevant to our existence, so of course we have some highly specialized capacities for dealing with them. Speech, movement, body language, behavior, and consistency of actions are all things we're well accustomed to reading.

That means people are much more difficult to simulate than rocks and trees, not just because of their relative complexity, but because we're wired to scrutinize our fellow humans more closely. In film and real-time rendering alike, the plastic sheen of 1990s CGI has given way to environments that my unconscious mind accepts without balking, even when they aren't quite photoreal, but simulated people continue to stand out from them as fake.

Whether or not something is "realistic" is largely a red herring. The more important test is whether or not it's convincing, and I suspect behavior will prove to be a much bigger challenge than appearance.

Simulated appearance can be constructed from various elements that we are presently mastering. Behavior, by contrast, is a complex, dynamic, context-sensitive system that, in addition to dealing with immediate situations, can also be informed by elaborate historical contexts and long-term aims. Where actions and physicality are based on syntax, the behaviors underlying the vast scope of human actions, along with the limited repertoires imparted to AI, are often about meaning and have a rich undercurrent of semantic relations.

Real human behavior, for the most part, seamlessly elicits my empathy, and also tells me that others, in turn, understand and empathize with me. It also tends to be consistent, and any inconsistencies can generally be expected to be explained at some point.

At best, such dynamics exist in game AI only in fragmented form, if at all. Game AI generally follows a very predictable cycle no matter how good it is: when it's new it may surprise me a few times with various tricks, and will tend to elicit empathy too, but every time a human-seeming art asset or piece of behavior is instanced or recurs, my empathy diminishes. This continues until eventually I can let my id go to town on NPCs without feeling bad. The more the AI repeats itself, the more likely this result is.

Beyond patchy AI, the emotional engagement of a game is in the motivation I have to achieve goals, which are nothing but syntax. Games can and do rise above this. At present, there seem to be two ways in which they can use NPC behavior to drive emotionally engaging narrative and social interaction.

The first is traditional, non-interactive storytelling. By putting a game on rails or inserting huge cutscenes, a lot of traditional media techniques are of course open to game developers.

The second way is to use convincing fragments of interaction. This is more adaptive, but as yet not sustainable through time. For example, in F.E.A.R., at one point when I did particularly well at taking down a group of soldiers, the last one exclaimed "No fuckin' way!" just before I dispatched him. Though it was of course pre-recorded voice acting, the triggering of it was very well timed and created a brilliant moment, raising the game above the syntax of combat. In that instant the soldier was a character, not an entity.

Of course, any attempt to extend that into a conversation rather than a fight would, at present, break rapidly. This is exactly what happened repeatedly in Façade. No matter how many sad looks Trip shot at me, I'd always catch him doing something inhuman shortly after. Many game AIs have engaged and convinced me for a moment or two, but ultimately a five second Turing test isn't a very high benchmark.

Because of this limitation, I automatically assume game AI won't be convincing and forgive any errors it makes, such as running into things, repeating itself, taking unnaturally long pauses during conversation, and staring at me. Fragmented AI regularly communicates its inhumanity and punctures immersion.

However, it is becoming increasingly sophisticated, and that means that as it engages more of the parts of our brain used in socialization, it will pass a point where it will stop looking like good AI and start looking like bad acting or dysfunctional behavior. When interactive entertainment hits that point, it won't just be something we can laugh at like a B-movie, because it won't be a passive experience. It's going to be reaching out to us and pushing all the wrong buttons.

There are limited examples of it happening already. In Half-Life 2, Alyx was programmed to look at the player while talking to create a sense of eye contact, a step above the previous generation of art and AI, but the illusion snapped when she was talking to me on a descending lift: her eyes kept slowly rolling upward, then flicking back down to me, because the point she was scripted to look at wasn't updating as fast as my location. If a real human did that near me, I'd be concerned for their well-being.
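The lift glitch comes down to a cached look-at target going stale. As a rough illustration, here is a minimal Python sketch under loudly stated assumptions: the NPC and the player ride the same descending lift with their eyes level, and the NPC's look-at point is stored in world space and re-sampled only every few frames. The function name and parameters (`gaze_pitch_error`, `refresh_every`, `lift_speed`, `horizontal_dist`) are hypothetical; this is not how the Source engine or Half-Life 2 actually implements gaze.

```python
import math


def gaze_pitch_error(frames, refresh_every, lift_speed=0.05, horizontal_dist=1.0):
    """Per-frame pitch error (degrees) of a hypothetical NPC's gaze.

    Assumptions (illustrative only): NPC and player share a lift that drops
    `lift_speed` units per frame, their eyes are at the same height, and the
    look-at point is cached in world space, refreshed every `refresh_every`
    frames. The correct pitch is therefore always 0 degrees.
    """
    errors = []
    cached_target_y = 0.0  # world-space height of the cached look-at point
    for frame in range(frames):
        eye_y = -lift_speed * frame  # current eye height of both characters
        if frame % refresh_every == 0:
            cached_target_y = eye_y  # re-sample the player's eye position
        # Angle from the NPC's eyes to the (possibly stale) cached point;
        # positive means the NPC is looking above the player.
        pitch = math.degrees(math.atan2(cached_target_y - eye_y, horizontal_dist))
        errors.append(pitch)
    return errors


if __name__ == "__main__":
    per_frame = gaze_pitch_error(frames=30, refresh_every=1)
    stale = gaze_pitch_error(frames=30, refresh_every=10)
    print("refresh every frame, max error:", max(per_frame))
    print("refresh every 10 frames, max error:", round(max(stale), 1))
```

With `refresh_every=1` the error stays at zero. With a longer interval it traces a sawtooth, climbing each frame as the stale point drifts above the player and snapping back to zero on each refresh, which matches the slow upward roll and sudden flick described above.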

In that game, despite every emotionally convincing moment delivered by the combination of storytelling, AI and art assets, it took only that one error to unhook a great big wedge of my empathy and make me laugh.

The closer a representation of a human is to reality, the slighter the flaws that can suddenly de-animate it. AI systems are rather fragile right now, whereas organic intelligence is decidedly robust, being able to operate in and adapt to a multitude of contexts.

We tend to take our own adaptivity for granted because it's such an everyday thing, and it's often the oddities of humans that make them more interesting and charming. Only certain subsets of characteristics make socialization more challenging, and even then it can be offensive to define them as flaws.

Sometimes it's just that people are a little de-socialized, but even so I think an important and much more formal connection between people and present level game AI can be found in psychiatry: the autism spectrum.

This spectrum is a psychiatric construct that defines various behavioral symptoms as disorders of varying severity. Stated very simplistically, some positions on the spectrum involve enhanced specialization and a lack of social ability, but it should be stressed that this is not a trade-off.

The extraordinarily talented autistic savants sometimes paraded on TV and brought especially into public consciousness by the film Rain Man only comprise a fraction of autistic people. Also, while it is well known and obvious that they have limited social ability, an incredibly important component of autism is rarely discussed in popular culture: The ability of autistic people to understand the subjective viewpoints of others is drastically impaired.

To illustrate, an autistic child is shown a model in which person A puts an object away in front of person B, then leaves the room. Person B then takes the object and conceals it in a different location, then person A re-enters. If told that person A wants the object back and asked to show where he will go first to get it, an autistic child will likely point straight to the hiding place used by person B.

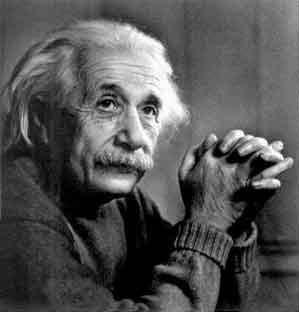
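The task above is a version of what psychologists call a false-belief test. The difference between failing and passing it can be sketched in a few lines of Python: one predictor consults the true state of the world, the other consults only what the character actually witnessed. This is a hypothetical toy model (the `Room` class, both predictor functions, and the object and place names are invented for illustration), not a description of any shipping game AI.

```python
from typing import Dict, Set


class Room:
    """Toy false-belief scenario (hypothetical model).

    `world` holds the true location of each object; `beliefs` holds where each
    character last saw it, updated only for characters present in the room.
    """

    def __init__(self, characters: Set[str]) -> None:
        self.present: Set[str] = set(characters)
        self.world: Dict[str, str] = {}
        self.beliefs: Dict[str, Dict[str, str]] = {c: {} for c in characters}

    def leave(self, who: str) -> None:
        self.present.discard(who)

    def enter(self, who: str) -> None:
        self.present.add(who)

    def put(self, obj: str, place: str) -> None:
        self.world[obj] = place
        for who in self.present:  # only witnesses learn about the move
            self.beliefs[who][obj] = place


def predict_search_naive(room: Room, who: str, obj: str) -> str:
    # Ignores `who` entirely: assumes everyone knows the true state of the world.
    return room.world[obj]


def predict_search_with_belief(room: Room, who: str, obj: str) -> str:
    # Models the other person's subjective viewpoint: where *they* last saw it.
    return room.beliefs[who][obj]


if __name__ == "__main__":
    room = Room({"A", "B"})
    room.put("ball", "box")       # A puts the object away in front of B
    room.leave("A")
    room.put("ball", "cupboard")  # B hides it elsewhere while A is out
    room.enter("A")
    print(predict_search_naive(room, "A", "ball"))        # cupboard
    print(predict_search_with_belief(room, "A", "ball"))  # box
```

The naive predictor gives the same answer as the child in the experiment. Game AI that queries world state directly has, in effect, the same blind spot, since nothing in it represents what anyone else knows.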

Asperger’s syndrome is a less severe part of the spectrum, in which people generally show some form of above normal mental ability coupled with somewhat obsessive interests, and are somewhat disconnected and uncomprehending of the emotions of those around them. It's sometimes claimed that Albert Einstein had Asperger’s.

In its high specialization and complete inability to understand people, game AI shows very similar symptoms to people on the autism spectrum. It doesn't really have a place on the spectrum itself though, because it breaks out of the far end, being so narrowly active and empathically blind as to be beyond autistic.

The more comprehensive it gets, though, the less machine-like it seems and the closer it comes to behaving like a particular subset of unusual human beings. Advanced AI will probably follow a reverse trajectory down the autism spectrum before it really fools us.

As a result, I suspect that consultation with and evaluation by psychology departments may become relevant to game AI in the coming years, given that they're the most comprehensive resource in existence on human behavior.

Psychology generally has a hard time, often being accused of unscientific practice, and psychiatry is also accused of prejudice in the way it defines certain things as disorders. Psychology has been through so many upheavals, and has so many schools and movements, that to even define it as a single thing can seem like a stretch sometimes.

Furthermore, despite over a century of study, much of the human mind and brain remain a black box to us. We see what is going on from the outside, but have so far had only limited ability to measure and peer into what's going on internally.

This is where game developers are going to have some significant advantages over psychologists. If looked at from the point of view of hard data and proven theories, psychology is very difficult to penetrate.

However, where a scientist must test, measure, revise and prove things, game developers can simulate and create systems. Looked at in terms of unproven theories, psychology is a smorgasbord of ifs, maybes, and analytical skills rather than hard facts.

A lot of what we intuitively know about people remains immeasurable because of limitations on our technology and knowledge. For instance, the positive and negative valence of emotional states in others is obvious to most people through facial expression, voice intonation, posture, and so forth, yet none of these constitutes an impossible-to-fake, objectively reliable measure, and magnetic resonance imaging has not yet reached a fine enough resolution to allow sufficient neurological observation.

While reliable enough for everyday interaction, the signs we read by second nature are not absolute. It is our unconscious knowledge of how humans behave that enables us to pick out the good fakes, and bringing that knowledge to light will take a lot of study and analysis.

Comprehensive knowledge of the mechanics upon which human behavior operates is a tall order, but luckily, while still a mountain we're yet to scale, a well informed AI performance is not so ambitious. By building towards more convincing AI, game developers are not becoming scientists, merely better magicians.
