Gamasutra chats up the CEO of nDreams, the once-major PlayStation Home studio now embracing multi-platform VR development, for advice on what game devs should watch out for when taking the VR plunge.

The ins and outs of designing virtual reality games are fascinating because the medium is so new -- we're watching developers figure out what works and what doesn't right before our eyes.

One experienced VR studio is nDreams, the U.K.-based game company founded by Patrick O'Luanaigh after he left Eidos in 2006. For a long time, the company's focus was on making and selling PlayStation Home content, but after getting early looks at the Oculus Rift and what would become Sony's Project Morpheus VR headset, nDreams decided to pivot from virtual worlds to virtual reality games.

"Basically, we fell in love with VR," O'Luanaigh tells me. "We switched to outsource all of the [PlayStation Home] art work using external devs, and we grew our internal team using the money we were making and basically did lots of experimenting and prototyping in VR."

In the first six months, he estimates, nDreams ran 40 different experiments to see how common game design tropes feel in VR. What happens, for example, if your avatar is wearing glasses? Is it a good idea to render the rims around your eyes?

What happens if you breathe in cold air and can see the frost in front of you? How does that feel in VR? What happens if you walk up against a wall with positional head tracking enabled? "We did lots and lots of weird experiments to figure out what worked well and what didn’t work well," admits O'Luanaigh, with a laugh.

But now the steady flow of Home money to fund experiments has dried up; O'Luanaigh says the virtual world is "actually shut down in terms of revenue now," which has led nDreams to raise $2.75 million in funding from investor Mercia Technologies to focus on VR development.

With a pair of VR releases under its belt and more to come, we chatted with the CEO of nDreams to get some advice on what other developers should watch out for when taking the VR plunge.

Making a first-person VR game is harder than it might seem

O’Luanaigh says the team at nDreams learned some basic lessons very quickly once it began building VR game prototypes.

Common game design staples like cutscenes don’t really work in VR, for example -- automatic camera movement is a recipe for nausea when the player’s head stands in for the camera.

Even small movements like first-person head bob can cause problems. “We had some head bob in first-person prototypes and when you stick even that little bit of movement in VR, oh my word that’s horrible,” says O’Luanaigh. “You have to be very careful about doing any kind of camera movement; you really have to leave it up to the player.”


You also have to rethink how you allow players to move through your game. You need to pace things for actual humans, instead of superhuman video game protagonists.

“If you look at the run speed in Call of Duty, you’re sprinting not far off Usain Bolt speeds and you’ve got this giant backpack on,” says O’Luanaigh. “And then you turn around and you’re completely rotating three times a second, a stupidly fast rotation speed, so you can whip around and shoot lots of people.”

The nDreams chief admits that works very well on a console, but causes no end of trouble when applied to a VR game.

“Because your player feels presence and believes they’re there, you have to limit things to what feels comfortable in real life,” adds O’Luanaigh. “In real life, you’ll rotate one complete turn maybe...every couple of seconds? Any faster and you start to feel dizzy; the same is true in VR.”
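O’Luanaigh’s rule of thumb reduces to simple arithmetic: one full turn every couple of seconds is roughly 360° / 2 s = 180° per second, while three full rotations per second, the Call of Duty figure he cites, is 3 × 360° = 1,080° per second, six times faster. The following is a minimal Python sketch of how a game might cap stick-driven turning at that comfort rate. The function name, the 180°/s constant and the assumption that stick input arrives in the range [-1, 1] are illustrative choices, not nDreams’ actual code.

```python
# Hypothetical comfort cap derived from the rough figure quoted above:
# one full turn (360 degrees) every ~2 seconds.
COMFORT_MAX_YAW_DEG_PER_SEC = 360.0 / 2.0  # 180 deg/s

# For comparison: three full turns per second, as described for Call of Duty.
ARCADE_YAW_DEG_PER_SEC = 3 * 360.0  # 1080 deg/s


def step_yaw(current_yaw_deg: float, stick_x: float, dt: float,
             max_rate_deg_per_sec: float = COMFORT_MAX_YAW_DEG_PER_SEC) -> float:
    """Advance the player's yaw by one frame of analog stick input.

    stick_x is assumed to lie in [-1, 1]; it is clamped so that the turn rate
    can never exceed max_rate_deg_per_sec, whatever the raw input says.
    dt is the frame time in seconds. Returns the new yaw wrapped to [0, 360).
    """
    stick_x = max(-1.0, min(1.0, stick_x))
    new_yaw = current_yaw_deg + stick_x * max_rate_deg_per_sec * dt
    return new_yaw % 360.0
```

With the stick held fully over for one second, the player turns half a circle rather than three full ones; any head rotation from the headset’s own tracking would be applied separately and never capped, since that motion is always under the player’s control.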

Third-person cameras work better than you think

O’Luanaigh notes that he and his fellow developers have found certain types of third-person cameras work “surprisingly well” for VR game development.

“We went into VR assuming it had to be first-person and that was it,” says O’Luanaigh. “But the more we played around with third-person cameras -- almost like god-style cameras, the kind of things you see in an RTS -- the more we realized they can work fantastically well in VR.”

But you have to restrict their movements to avoid inducing the same sort of motion sickness caused by jerky first-person cameras. The two types of third-person cameras nDreams developers prefer are fixed-distance setups (where the camera hovers a fixed distance and angle above the playfield, à la the original Diablo) and setups that afford the player full control over moving a floating camera around a discrete game space -- like the diorama-esque worlds of Populous or The Sims, for example.

The former works well because, according to O’Luanaigh, “the camera is never swooping in or trying to rotate behind the character,” which minimizes motion sickness from automated camera movement. As for the latter, well, the appeal seems obvious -- your player puts on a VR headset and effectively sticks their head inside your game world, allowing them to naturally move their head using positional tracking to get in closer and see what they’re doing.
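The fixed-distance setup can be pictured as a camera rig locked at a constant offset from an anchor point on the playfield, with the player’s tracked head position layered on top. The Python sketch below is one way to express that idea, assuming a simple `Vec3` type and a hypothetical offset of 10 units up and 10 units back; none of these names or values come from nDreams. The rig translates with its anchor but has no code path that swoops in or rotates behind the character, which is the property O’Luanaigh credits for keeping players comfortable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)


# Hypothetical rig offset: 10 units above and 10 units behind the anchor.
FIXED_OFFSET = Vec3(0.0, 10.0, -10.0)


def camera_rig_position(anchor: Vec3, head_offset: Vec3) -> Vec3:
    """Return the VR camera position for a fixed-distance overhead view.

    The rig always sits at FIXED_OFFSET from the anchor (e.g. the character),
    and its orientation is never changed by the game. The only additional
    motion is head_offset, the player's own positionally tracked head movement.
    """
    return anchor + FIXED_OFFSET + head_offset
```

For example, with the character at (1, 0, 2) and the player leaning 0.3 units forward, the camera lands at (1, 10, -7.7): the game contributes a constant displacement, and the lean comes entirely from the player.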

“That works fantastically well,” says O’Luanaigh, “because there’s no automated camera movement at all; it’s literally just your head looking down, looking forward and rotating around.”

You have to totally rethink controls and UI

Controls are a real challenge for VR game developers at the moment, in part because they have to be intuitive (players can't see their hands, after all) but mostly because it's not always clear what your players will be holding when they boot up your game.

"Particularly on the Oculus at the moment, because there’s no default controller you have to just sort of hope people have a gamepad," says O’Luanaigh. "If you start using the keyboard or mouse, it can be quite tricky with the headset on. It’s much easier on Morpheus, because of the DualShock 4."

You also have to forget most of what you know about good user interface design.

"The onscreen interface is really difficult in VR, and very challenging, because you don’t really have a 'front' anywhere," says O’Luanaigh. Trying to sort of "float" your user interface in front of the player's eyes doesn't work very well, so O’Luanaigh says nDreams developers "tend to put the GUI onto a 3D object like a watch, or something like that; something your character is carrying."

Oculus and Morpheus: the same, but different

After a profitable run as a Home developer, nDreams is working on projects across multiple VR platforms -- including the Oculus Rift and Sony's upcoming "Project Morpheus" VR headset. Close ties with Sony afforded nDreams the opportunity to start working with Morpheus hardware pretty early, but O’Luanaigh says developers shouldn't worry about major differences between the two headsets.

"Power-wise and in terms of performance, they’re not too dissimilar," he says. "So we’re able to make the same games across both headsets, with a few tiny differences."

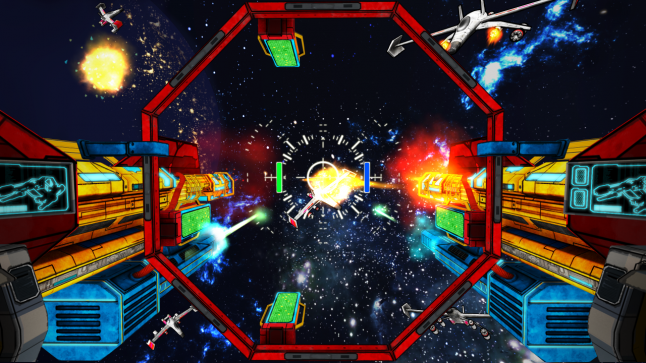

The studio is currently using Unity to develop mobile VR experiences (including Gunner) for headsets like Samsung's Gear VR, and Unreal for its PC and PlayStation 4 VR work.

"The Oculus’ biggest strength is that it’s open -- anybody can get hold of it very cheaply, and it’s very easy to hack and get all sorts of rough stuff up and running," says O’Luanaigh. "It’s a really easy, accessible VR headset."

The biggest downsides to developing for the Rift, he admits, are its lack of a default controller and its demand for a PC capable of running games well enough to avoid making players ill.

"It’s a great piece of kit, but you do need a powerful PC to run the Oculus," says the nDreams CEO. "My recommendation for devs would be to get it working with a controller, and maybe look at the Leap Motion -- this great little PC device that tracks your hands."

O’Luanaigh believes that Oculus VR will debut a default Rift control system at some point, and that it's quite likely -- based on the companies it has acquired -- that it will incorporate gesture controls in some fashion.

"So you can play around with that now with a Leap, and see if you can get it working for your game," he recommends.

As far as Sony's headset goes, the verdict seems to be that it's trickier to get your hands on but more accessible to your players.

"You do need to be a PlayStation developer, so there’s a few more hoops to jump through: it’s more expensive, you have to get the kit and stuff," says O’Luanaigh. "But Unreal works really nicely on it, and it’s a lovely piece of hardware as well. It’s very similar to the Oculus, actually -- both have positional tracking, both have great screens, both run Unreal very nicely. To be honest with you, there’s not a lot of difference between them."

However, O’Luanaigh adds that Morpheus has a significant advantage over Oculus in the form of the DualShock 4 controller.

"That has positional tracking and rotation kind of built in. It’s a very nice default controller that you know everyone will have," he says. "And also, you know every PS4 user will be able to use the Morpheus, while you can’t guarantee that every PC user will be able to use the Oculus due to performance requirements."
