
Lots of games have implemented a "morality system", but these often leave players underwhelmed. A random insight from a match-3 game suggests a reason why, and an approach to solving the problem.

This post is adapted from a previous blog post.

TL;DR - Games suck at morality, but they are hampered by the fact that they can't read the player's mind.

The Inciting Incident

The other day I was playing a game called "Chuzzle". It's yet another match-3 game that goes out of its way to make the whole experience... cute. You're matching little furry tribble-like creatures that watch the mouse cursor, and squeak happily when they're matched.

Chuzzle screenshot

Occasionally, however, you get a not-so-happy squeak. This is the game trying to tell you you've made a bad move. I had wondered for a while exactly how it decides that a move is "bad", as - from my perspective - it wasn't. That day I suddenly realised the reason for the dissonance: I was evaluating moves retrospectively (i.e. based on their outcomes) whereas the game had to evaluate them based on the state of the board at the time. Now, you'd think this wouldn't be a major epiphany; most would probably go "Oh, that's what it is" and carry on with their day. For me, this forged rapid mental connections to other ideas I'd had in the back of my mind for some time, leading to much rambling and discussion. Rose Red laughed at me; apparently only I could find something deep and thought-provoking in Chuzzle.

Karma

A lot of computer games, predominantly RPGs, have what's sometimes referred to as a "karma meter" (see tvtropes if this isn't enough reading already). This is probably inspired by the Dungeons & Dragons alignment system, which represents a useful (and not overly complex) categorisation of different moral perspectives. It has two axes:

Lawful—Neutral—Chaotic (does the character follow the rules)

Good—Neutral—Evil (is the character virtuous)

The drawback to D&D's system is that it's a player-driven labelling tool - a player decides that the character they are creating will be "Lawful Good" (for example) - it's not a property that develops through the course of the game depending on what the character ends up doing (though the other players will probably complain if the character is not behaving according to their alignment).

Measuring Morality

The most basic way a game could track changing morality is with a single number representing "goodness". When you do something "good", it goes up; when you do something "evil", it goes down. Alert readers may already have noticed the problem with this: picking up lots of litter will cancel out burning down an orphanage. To get around this, you need two numbers: one representing "good" and one representing "evil". This allows for a much richer and more realistic assessment of a character's morals. At the very least, the game should be able to recognise four different states:

Low "Good"/Low "Evil": the player is neutral

High "Good"/Low "Evil": the player is virtuous

Low "Good"/High "Evil": the player is dastardly

High "Good"/High "Evil": the player is amoral
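To make the difference concrete, here is a minimal sketch of the two approaches. Everything in it is my own assumption for illustration: the `Karma` class, the `THRESHOLD` cut-off and the point values are hypothetical and not taken from any particular game.

```python
from dataclasses import dataclass

# Hypothetical cut-off between "low" and "high"; a real game would tune this.
THRESHOLD = 50


@dataclass
class Karma:
    """Two independent meters, so good deeds never erase evil ones."""
    good: int = 0
    evil: int = 0

    def classify(self) -> str:
        high_good = self.good >= THRESHOLD
        high_evil = self.evil >= THRESHOLD
        if high_good and high_evil:
            return "amoral"
        if high_good:
            return "virtuous"
        if high_evil:
            return "dastardly"
        return "neutral"


# Hypothetical deed values: 100 pieces of litter picked up (+1 each),
# then one orphanage burned down (-100).
deeds = [1] * 100 + [-100]

# Single-meter approach: everything collapses into one running total.
net = sum(deeds)

# Two-meter approach: positive and negative deeds are tracked separately.
karma = Karma()
for points in deeds:
    if points > 0:
        karma.good += points
    else:
        karma.evil += -points

print(net)               # the single meter cannot tell this apart from doing nothing
print(karma.classify())  # the two meters can
```

With these made-up numbers the single meter ends at zero, exactly where a character who did nothing would be, while the two-meter version still remembers both the litter and the orphanage.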

Sadly, as anyone who has played this sort of game will probably have noticed, most games really only recognise the second and third states (the first state exists by default, in that it's typically where the character starts, but there's usually only a "good" ending and an "evil" ending - see No Points for Neutrality on tvtropes.org).

By Whose Law?

So, it shouldn't be too hard for games to do better than they are, but this isn't the end of the story. You may have noted that I've been referring to "good" and "evil" in quotation marks. This is because we've been talking about a single axis, instead of the two mentioned earlier. To qualify something as "good" or "evil", you must be clear what system of measurement you are using. "Legal" is not necessarily the same as "moral". How many times has a major plot point of a book or movie been a character disobeying an order/breaking the law to do something they believe is right? (NB: please don't take this to mean that I'm advocating moral relativism. I'm just noting that people can have different priorities when it comes to virtues.)

Nuance and Conflict

Another feature that generally isn't well-implemented in games is presenting the player with a true moral dilemma. Most of the time, when a player is given a choice between two (or more) actions, it is clear that one is the "good" option and another is the "evil" option. This is a moral choice. By contrast, a moral dilemma is when you are faced with - for example - trying to choose the lesser of two evils. If you know which choice leads to the result you want, it's not a dilemma.

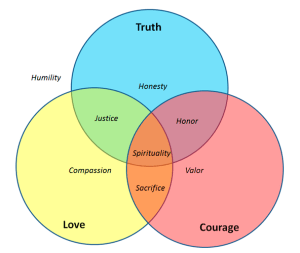

Similarly, there is more than one virtue. One game that recognised this (I haven't played it, so I don't know how good a job they did) is Ultima IV. It had eight virtues the player's character was trying to cultivate, and it presented situations with those virtues in conflict (e.g. a friend takes credit for your accomplishment. Should you correct them - Honesty - or let them have the glory - Humility?). Depending on how realistic the scenarios were, this could make for some very interesting dilemmas.

ultimaIV

From crpgaddict.blogspot.co.nz

Intention, Outcome, Reaction

So how does this all relate to my Chuzzle epiphany that began this big wall o' text? Well, sadly, despite the possibilities inherent in the ideas I've described above, games are nobbled before they've started the race. Why, you may ask? Because, like Chuzzle, they can only judge actions based on a limited amount of information. Step into the - hypothetical - real world for a moment. Think about the different scenarios of a murder trial. The defendant could be found:

Not guilty - they neither attempted nor succeeded in killing the victim

Guilty of manslaughter - they did not intend to kill the victim, but did cause their death

Guilty of murder - they deliberately killed the victim

Guilty of attempted murder - they tried, but did not succeed

The moral judgement the judge and/or jury make on the defendant will vary depending on their [the defendant's] Intentions, and the Outcome of their actions. As some friends pointed out, their response is also important. Their Reaction (e.g. remorse or lack thereof) to the victim's death (or survival, in the case of attempted murder) gives some indication as to their intention, and likely also affects the severity of the sentence given.
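The four verdicts above are really a two-by-two grid of Intention and Outcome, which can be sketched in a few lines. This is a deliberately simplified illustration, not a statement of how any legal system works; the `verdict` function and its parameter names are my own invention, and it leaves Reaction out entirely.

```python
def verdict(intended_to_kill: bool, victim_died: bool) -> str:
    """Map Intention and Outcome onto the four verdicts listed above."""
    if intended_to_kill and victim_died:
        return "guilty of murder"
    if intended_to_kill:
        return "guilty of attempted murder"
    if victim_died:
        return "guilty of manslaughter"
    return "not guilty"


# Print the whole grid.
for intended in (False, True):
    for died in (False, True):
        print(f"intended={intended}, died={died}: {verdict(intended, died)}")
```

Note that the function needs both inputs to tell the verdicts apart. A judge and jury can try to work out `intended_to_kill`; a game usually only has something like `victim_died` to go on.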

People are, generally speaking, reasonably good at assessing the motivations for someone else's actions, and whether or not that person regrets their actions (aside from the Fundamental Attribution Error and similar cognitive biases). Computers, on the other hand, are not. Usually, they lack even the necessary perspective to attempt to make such assessments (as they cannot see the person's face, cannot hear their tone of voice, etc). That means any game (barring drastic and unlikely advances in the field of Artificial Intelligence) is reduced to making judgements purely based on Actions (i.e. is this act fundamentally "good"/"evil" regardless of circumstance) and Outcomes.

How much does this matter? Well, let me present you with an example. Let's say that a player wants to enter a castle, but there is a guard at the gate, and the player does not know the password. It's not implausible that slaughtering the guard and looting their corpse for the key would be considered "evil", and would score the player's character a significant number of "evil" points. The only way the game can determine whether this has happened is to record whether the guard is dead, and whether the player chose to attack the guard. So let's consider some other actions. Attacking a guard is "evil", but attacking an ogre wouldn't be (unless of course the game is trying to make a point about bigotry or something). Choosing to run away from an angry ogre isn't "evil", merely good sense. Running past a guard isn't "evil" (though it may incur a minor penalty if you bump into them). Is it your fault that the angry and short-sighted ogre chasing you happened to take it out on the nearest available human (i.e. the guard)? The point is that cunning players will find a way to achieve their nefarious ends without doing anything that is obviously "evil" (and computers are not good at dealing with things that aren't obvious). Alternatively, if you've set up a nice moral dilemma, the player may choose to do something "evil" to achieve something "good" - how should the action be remembered?
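Here is a rough sketch of the loophole in the guard example, assuming the game scores morality by looking up each player action in a rule table. The `Event` record, the `EVIL_POINTS` table and the point values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    actor: str   # who performed the action
    action: str  # e.g. "attack", "flee", "run_past"
    target: str  # who or what was acted upon


# Hypothetical rule table: (action, target) -> "evil" points.
# Attacking an ogre, fleeing and running past someone score nothing.
EVIL_POINTS = {("attack", "guard"): 50}


def score_evil(log: list[Event], player: str = "player") -> int:
    """Add up "evil" points for actions the player performed directly."""
    return sum(
        EVIL_POINTS.get((event.action, event.target), 0)
        for event in log
        if event.actor == player
    )


# The obvious route: the player kills the guard themselves.
direct = [Event("player", "attack", "guard")]

# The cunning route: lead the angry ogre to the gate and let it do the work.
devious = [
    Event("player", "flee", "ogre"),
    Event("player", "run_past", "guard"),
    Event("ogre", "attack", "guard"),
]

print(score_evil(direct))
print(score_evil(devious))
```

Both logs end with a dead guard and an open route to the key, but only the first one costs the player anything. You could patch this particular hole with more rules, but each patch is just another list of obvious cases for a cunning player to step around.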

Conclusion

Essentially, computers are not very good at measuring morality. I don't know the best solution to this problem; I'm just presenting a different perspective (solving a problem is easier if you have a clear idea of its parameters). We can do better, but we'll have to be very careful about setting up moral quandaries for the player, and measuring the right elements to ensure the player is fairly rewarded/punished for their actions. As a non-moral example, if the goal is to get inside the castle, you should measure this by testing if the player is inside the castle, not if the player has unlocked the castle gate (this is a Twinkie Denial Condition from way back).
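The castle example can be sketched as two ways of writing the same goal check. The `World` fields and function names below are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class World:
    gate_unlocked: bool = False
    player_location: str = "outside"  # hypothetical values: "outside" or "castle"


def goal_met_by_method(world: World) -> bool:
    """Fragile: tests the route the designer expected the player to take."""
    return world.gate_unlocked


def goal_met_by_outcome(world: World) -> bool:
    """Robust: tests the thing the goal is actually about."""
    return world.player_location == "castle"


# The player climbs the wall instead of unlocking the gate.
world = World(gate_unlocked=False, player_location="castle")

print(goal_met_by_method(world))
print(goal_met_by_outcome(world))
```

The first check tells a player standing in the castle courtyard that they haven't got in yet; the second doesn't care how they arrived.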

Afterthought

I realise that such features make a game more complicated, and more realistic, but they don't necessarily make it more fun. However, despite what some people may argue, fun is not the primary focus. Not all movies are fun, but all successful ones are engaging in some way or another. One might say that frustration isn't fun, yet games can become boring if they are too easy. It's not the mechanism that makes something work; it's the way it is used to create the overall experience (*waves hands madly and holistically*).
