Developers with Playful Corporation, Carbon Games, Minority Media, and Cloudhead Games tell us how they surmounted unexpected design difficulties in their VR game projects.

The good news about virtual reality: It's a fresh new medium, and you can break new ground in your game design from day one. The bad news about virtual reality: you're just as liable to break your game as you are to break new ground.

For developers looking to avoid the former on the way to the latter, we checked in with VR developers Paul Bettner of Playful Corporation, James Green of Carbon Games Inc, Dan Junger and Joel Green of Cloudhead Games, and Pat Harris of Minority Media, to give you some best practices for bringing your audience into your digital worlds.

Problem Area One: scale, detail and distance

To kick things off, Playful Corporation CEO Paul Bettner, whose game Lucky’s Tale is being published by Oculus as a launch title, advises developers to adopt an open-ended mindset when tackling problems in VR. “When you start prototyping in VR and your idea doesn’t immediately work just right, you can quickly either commit to bad ideas, or abandon the project entirely,” he says.

“We found that if we put our baggage aside, and play with scale, lighting, look, and graphics, we can find something new that works well in VR that we could have never built for traditional TV monitors.”

Lucky’s Tale is one of the first games to experiment with third-person cameras in VR in order to recreate the mascot 3D platformer, and Bettner says he applied his own advice there. “This is where scale comes in. If I tell an artist to make a character like Mario of Super Mario 64, they give me a character that’s 1.5 units high. That’s how our hero, Lucky, came out in the beginning.”

Bettner laughs at the result of that decision. “Put on the headset though, and it turns out none of us think Mario is this big---he’s as tall as a person, when he should be as tall as a water bottle.”

The fix was to downsize Lucky and consistently position him so that it felt like he was approximately an arm’s length away from the player, creating a kind of toybox effect, as though the player were moving Lucky across a diorama. “This spilled out into how we designed our art assets,” he says. “If we take our original high poly assets, put those in the game with no texture maps at all, that feels so much better than with texture maps.”

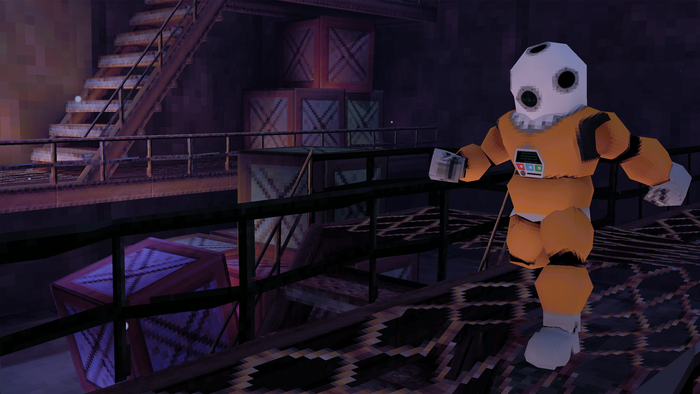

Carbon Games’ James Green agrees with the notion of throwing out texture maps and specular, even if he’s inclined to suggest low-poly assets instead of high ones. “You don’t want to use too many old tricks. Things like specular, texture mapping, bump mapping, they don’t work well in VR because you can’t render them stereoscopically. We use hand-painted textures to help hone in on our tabletop aesthetic. So don’t waste your time with normal maps, add a few polygons if you really need to pick up the edge somewhere.”

Problem Area Two: UI and menus

Green’s other big challenge in translating Airmech to Airmech VR from the ground up has been UI design. “The biggest lesson I’ve learned is keep it simple,” says Green. “You can’t just draw text on screen anymore like you used to be able to."

He recommends that you take advantage of that other kind of peripheral: peripheral vision. “One of the things we do is have supplemental menus come up at the side of your view, so you see it at the corner of your eye.”

“We don’t throw it in the player’s face, because we wanted to take advantage of natural behaviors, like how you might react if I came up and tapped you on the shoulder. I wouldn’t jump in your face, I’d try to get your attention, so you could choose whether to deal with me, or the car you’re about to step into the street.”

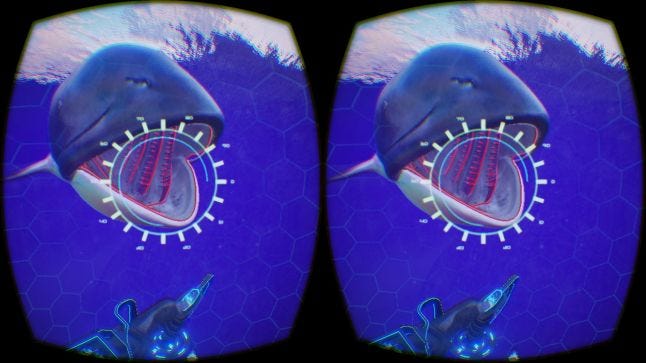

Also dealing with menu and UI challenges is Pat Harris, who is working on Minority Media’s Time Machine VR. Along with reiterating the crosshair failure story he told at Oculus Connect, Harris stressed the need to anchor menus and other UI elements to some kind of physical interface that the character, as well as the player, interacts with. “In VR there is no corner of the screen to place those menus or other UI bits on, so locking any floating orientation can be disorienting.”

The result for him is the time capsule that the player floats around in while journeying underwater. If you choose to mimic this model, just get ready to deal with the challenges of text sizes---Harris says higher resolution VR devices will help fix this, but if text shows up more than 20-30 feet away from the player, it won’t be immediately readable.
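Harris's point about distant text comes down to visual angle: the farther away a label sits, the larger it has to be drawn to cover the same slice of the player's view. The Python sketch below illustrates that relationship only. It is not code from Minority Media, the function names are hypothetical, and the one-degree legibility threshold is a placeholder assumption that would need tuning on a real headset.

```python
import math


def angular_size_deg(height_m: float, distance_m: float) -> float:
    """Visual angle, in degrees, of an object of a given height seen head-on."""
    return math.degrees(2.0 * math.atan(height_m / (2.0 * distance_m)))


def required_text_height_m(distance_m: float, min_angle_deg: float = 1.0) -> float:
    """Text height needed to cover min_angle_deg at distance_m.

    min_angle_deg is an assumed legibility threshold, not a measured value.
    """
    return 2.0 * distance_m * math.tan(math.radians(min_angle_deg) / 2.0)


# Roughly 25 feet (7.62 m), inside the range Harris mentions.
print(f"{required_text_height_m(7.62):.3f} m")
```

Because the required height grows in direct proportion to distance, text placed twice as far away has to be twice as tall to read the same.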

Problem Area Three: Motion sickness

Motion sickness in a platformer on the high seas is bad enough. Harris thinks motion sickness while underwater is even worse. With Time Machine VR, Harris says choosing a setting that matched the team’s movement goals helped them find believable physical movement with which to ease players into VR. Harris says, “We wanted to make sure we had smooth movement that didn’t make people sick, so moving the player around in the cockpit of an underwater time machine let us create motion that wasn’t at risk of being jerky or strainful.”

Harris echoes James Chung’s VR observations about needing to keep the game running at around 90 frames per second. He adds that special care is needed here because latency or performance jitters of any kind at this frame rate have triggered motion sickness in his testers, and he’s noticed that once the sickness starts, it doesn’t taper off until they take off the VR device.
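To put the 90 frames per second target in perspective, here is a minimal Python sketch of the arithmetic behind it. It is an illustration only, not code from any of the studios quoted here. The function names `frame_budget_ms` and `missed_frames` and the sample timings are hypothetical.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to simulate and render one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def missed_frames(frame_times_ms, target_fps=90.0):
    """Return the indices of frames that took longer than the budget."""
    budget = frame_budget_ms(target_fps)
    return [i for i, t in enumerate(frame_times_ms) if t > budget]


# Hypothetical frame timings in milliseconds, for illustration only.
samples = [10.2, 10.9, 14.5, 11.0, 11.3]
print(f"Budget at 90 FPS: {frame_budget_ms(90):.2f} ms")
print("Frames over budget:", missed_frames(samples))
```

At 90 FPS each frame has a little over 11 milliseconds to finish, so a single slow frame is enough to produce the kind of jitter Harris describes.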

If you’re working on the HTC Vive, or any other VR platform encouraging physical player movement with external cameras, motion sickness is going to be a problem there too. You also have to watch out for vection--when another object's motion gives you the illusion that you're moving. (Imagine sitting in a train that isn't moving, and the sight of another train passing on another track gives you the sense that the train you are in is moving.)

Creative director Dan Junger and producer Joel Green at Cloudhead Games just made a big decision to kill vection and artificial acceleration at all costs on The Gallery: Call of the Starseed. “Rotational vection is just terrible," says Junger. "Before we were on the Vive and using traditional controllers, we tried making a ‘VR comfort mode,’ to reduce what happens when you turn the camera with the right stick."

Once they got on the Vive, the 90 frames per second the device requires demanded that they throw all artificial acceleration out the window. The Gallery uses the Vive’s artificial volume, the digital volume created by the Vive’s physical cameras that lets players walk around and view environments from multiple angles, then introduces other techniques that began to evolve their whole design plan for the game.

Problem Area Four: Navigating environments and making them feel full

Leaving the struggles of motion sickness under the high seas, Junger and Green explain new ways to think about navigating large spaces in VR using HTC Vive. For them, the Vive’s biggest design challenges aren’t necessarily in the touch controllers, they’re in the aforementioned artificial volume.

Junger says even though this platform adds realistic player movement, it reduces how far they can walk in your game world before hitting a real wall or the natural edge of the roomscale. Green gets excited though, when explaining that developers can jump over this hurdle by reaching back to classic games and their limitations. For them, the navigation answer lay in Myst.

“Myst was one of the tonal inspirations for the game,” says Junger. “We looked to it again to solve the locomotion challenge by teaching players to teleport from roomscale to roomscale with the press of a button.” Much like Myst’s pre-loaded rooms that players click, a flick of the big left button on the HTC Vive controller gently teleports the player from where they’re standing to where they’re looking.
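One common way to find "where they're looking" is to cast a ray from the player's head along the gaze direction and see where it meets the floor. The Python sketch below shows that idea under simplifying assumptions: a flat floor at height zero, a hypothetical `max_range` limit, and a made-up function name. It is not Cloudhead's implementation, which the developers do not describe in detail.

```python
import math


def teleport_target(head_pos, gaze_dir, max_range=8.0):
    """Find where a gaze ray meets a flat floor at height y = 0.

    head_pos and gaze_dir are (x, y, z) tuples with y pointing up.
    Returns the (x, 0.0, z) landing point, or None if the player is
    not looking down at the floor or the point is beyond max_range.
    """
    px, py, pz = head_pos
    dx, dy, dz = gaze_dir
    if py <= 0 or dy >= 0:
        return None  # at or below the floor, or gaze is level or upward
    t = -py / dy  # ray parameter where the ray reaches y = 0
    x, z = px + t * dx, pz + t * dz
    if math.hypot(x - px, z - pz) > max_range:
        return None  # too far away to be a valid teleport (assumed limit)
    return (x, 0.0, z)


# Head 1.7 m above the floor, looking forward and down at 45 degrees.
print(teleport_target((0.0, 1.7, 0.0), (0.0, -1.0, -1.0)))
```

Rejecting targets that are out of range or above the horizon keeps each jump short and predictable, which fits the slower pace the developers describe.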

This solves a big navigation problem for games designed around the HTC Vive, and lets Junger and Green create a slower experience to let players poke around their mystery-driven story game. Of course, once you decide to let players poke around, you need to check your environments to see if you’ve accounted for everything they’ll want to interact with. “There’s a real sense in VR when people find the light in the smallest things,” says Green.

Junger adds, “for that kind of design, plan to spend a lot of development time accounting for everything players expect to happen when they grab something.”

It’s something Harris deals with on Time Machine VR as well, and he mentions that this kind of design will compete with your need to maintain 90 FPS. “The player can get close with almost every element in the game,” he says, “so you can’t cheap out. If things get blurry and ugly, it breaks immersion, and that’s tough to balance against 90 FPS performance.”

Pick Your Poison

For all the solutions developers have discussed for dealing with VR, James Green points out that no matter what kind of project you tackle, you’re going to have Everest-sized challenges. So no matter how you arm yourself, he advises you to be very specific with your goals. “I’m not saying ‘take the easy way out,’ I’m saying ‘choose which hard problems you want to solve. Is it making the game, or is it solving VR challenges no one’s solved yet?’

“You’ll be a hero if you do the latter,” he says with a chuckle.
