As a follow-up to "Grasping the Client-to-Cloud Revolution", Neil Schneider discusses the innovations and market breakthroughs he sees in store for content creators and technology makers in this developing next era of computing.

Last month, I shared an article entitled “Grasping the Client-to-Cloud Revolution”. Using products like Google Stadia as a backdrop, along with other market developments like Sony and Xbox partnering on cloud streaming technology, and impending connectivity advancements like 5G, I discussed where the next era of computing is heading. Referred to as the Client-to-Cloud Revolution, this shift means computing is breaking out of the confines of whatever hardware a machine is built with at the point of purchase. We are now moving into an enhanced world where all our devices could be instilled with the power of supercomputers. More importantly, it’s envisioned that this will be a collaborative computing model in which devices remain capable even when cloud connectivity is unavailable.

The previous article primarily focused on the user and consumer experiences: better access, more affordability for that access, and an all-around superior experience to what is readily available today. This accessibility will also be very portable, and the computing experiences we value most will be transferable from place to place without having to sacrifice much in the way of quality or effectiveness. This is just part of the story. The other half is what this could ultimately mean for content creators, application makers, hardware vendors, and ecosystems that have endured somewhat consistent business models for decades.

I will open by crediting Google Stadia’s partner program for successfully welcoming 4,000 applications from prospective developers. Google’s response was to encourage creators to put thought into what could be achieved with this newfound portability and distribution through multiple end-points at once; I refer to the end-points as “computing platforms”. I think Google’s recommendation is a bold start, and as this Client-to-Cloud Revolution develops, I’m looking forward to even more expansive opportunities and benefits worthy of the next era of computing across a wide range of products, services, and use cases. Allow me to explain!

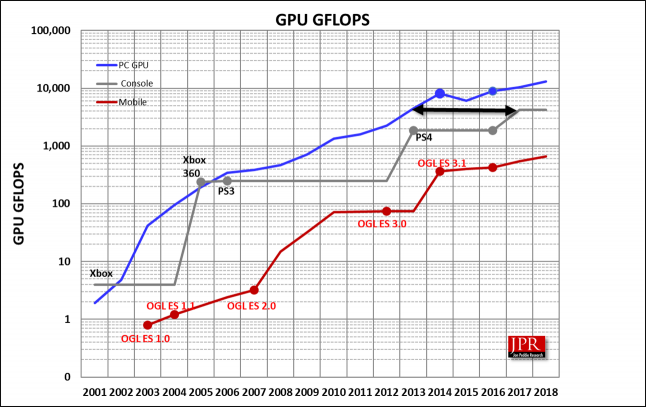

How Computing Platforms Fare Over Time in Terms of GPU GFLOPS

SOURCE: Jon Peddie Research

To date, content and application makers have always thought in terms of what hardware is out there and what that hardware is capable of. For argument’s sake, if I’m in game development and I want to create for a popular gaming console, I would have a very regimented outlook on how much of my vision I could deliver based on what the equipment is capable of. While I may have very beautiful game features in mind, the tradeoffs immediately come into play because that visual functionality could have steep performance costs.

Invincible Tiger: Legend of Han Tao (note the doubled view for 3D compatibility)

To help prove this point, think back to the stereoscopic 3D gaming days. It was a major hurdle to prove that these titles could run on a console rather than being relegated to just the PC markets, because the content required 30% to 50% more processing power to account for the second rendered camera view. Blitz Games Studios’ Invincible Tiger: Legend of Han Tao and Ubisoft’s Avatar games were valid proofs of concept on both the Sony PlayStation 3 and Microsoft’s Xbox 360; yet the visual tradeoffs required to make the games work were very clear. Modern virtual reality games also need to make tradeoffs because, instead of the console being free to devote 100% of its resources to a traditional 2D display, VR is far more stringent on the visual performance required for the experiences to be playable (and keep the nausea far away!).
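To put rough numbers on that stereoscopic overhead, here is a minimal back-of-the-envelope sketch. The 30% and 50% figures come from the paragraph above; the 60 frames-per-second baseline and the assumption that frame time grows in direct proportion to the extra processing are mine, purely for illustration, and `stereo_frame_rate` is a hypothetical helper name.

```python
def stereo_frame_rate(mono_fps: float, overhead: float) -> float:
    """Estimate the frame rate once a second camera view is rendered.

    Assumption (illustrative only): frame time scales linearly with the
    extra processing, so 30% more work means a 30% longer frame.
    """
    mono_frame_ms = 1000.0 / mono_fps            # time per frame in 2D
    stereo_frame_ms = mono_frame_ms * (1.0 + overhead)
    return 1000.0 / stereo_frame_ms


# Hypothetical 60 fps baseline, with the 30%-50% overhead range from the text.
for overhead in (0.30, 0.50):
    fps = stereo_frame_rate(60.0, overhead)
    print(f"{overhead:.0%} overhead: {fps:.1f} fps")
```

Under those assumptions, a game that comfortably holds 60 frames per second in 2D lands somewhere around 40 to 46 frames per second in stereoscopic 3D unless visual features are cut back, which is the kind of tradeoff described above.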

The PC has similar challenges. Even though AMD, Intel, and Nvidia continue to crank out graphics cards and processors that consistently impress with their rocket-powered performance, content creators are wise to support a much wider gamut of desktop and laptop computers that feature low to mid-range components to maximize their sales. In fact, it continues to be a challenge for PC gamers that AAA developers primarily code for the gaming console first, and design for the higher-performing PC desktop options later on. For sure, there are enhancements to be had like high dynamic range lighting, ray tracing, and all kinds of graphics filters…but are these options part of the artistic process, or just throw-ins to complete the platform offering? I would like to live in a world where all choices are part of the artistic process from the beginning.

Mobile platforms such as smartphones and tablets have processing power that is at least ten years behind anything you can do on PC. In theory, the biggest distribution of meaningful computing power our world has ever known is only now capable of rendering Call of Duty and maybe Borderlands. This isn’t even factoring in the storage costs for the content and the battery life needed to sustain the horsepower. Of course, there is a wide range of capabilities in mobile as well; I would venture that most smartphone owners still don’t have the processing power to attain the AAA gameplay we remember on PC and other platforms from as much as ten years ago!

Now what if we took all those limits away? What if a content creator could code once, have much of the rendering taken care of by one or more supercomputers, and have the user experience automatically delivered in the format that will provide the best experience for the user wherever they are and on whatever platform they are running? Thinking from the point of view of artists who want to inspire and envelop those who experience their work – what would they do with immense powers like that? I’m thinking a whole lot more than what we are seeing and experiencing today.

I firmly believe that the content creation business – perhaps the whole content creation business – is going to dramatically change its thinking in terms of what it can do and how it can do it.

So what of the hardware? Where do graphics cards and CPUs and peripherals live in this Client-to-Cloud Revolution? Where is the growth potential?

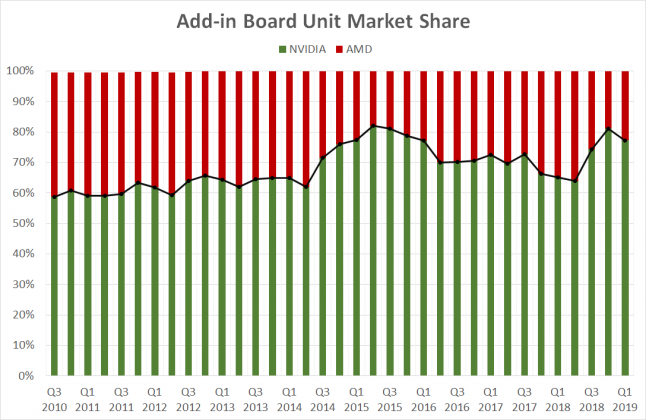

Last week, I saw a graph put out by Jon Peddie Research comparing the AMD and Nvidia footprint in the PC graphics card market, and it really got me thinking. In the consumer and workstation markets, graphics cards are 100% dependent on how many PCs exist in the world because the PC is the home they live in. In rare cases, there could be two graphics cards in a single PC for technologies like Nvidia’s SLI or AMD’s CrossFire – again, very rare. This means that no matter how the graphics card makers divide their sales footprint, it borders on being a zero-sum game because they are forever bottlenecked by the number of qualified PCs sold within a given time.

DreamWorks and Jon Peddie Research Discuss Benefits of Virtualization in Cloud Computing

Then I thought of a presentation I saw put on by DreamWorks and Intel a few years ago at SIGGRAPH. They explained that through the power of cloud computing (or more precisely "virtualization" within cloud computing), artists could collaborate and render from anywhere in the world to make a tentpole Hollywood animated feature. The message wasn’t so much about how fast each processor was. What mattered more was that the processing is scalable, and that the individual cores weren’t quite as important as the whole. I was impressed. Fast forward to the present day, and this Client-to-Cloud Revolution is introducing the opportunity for users to experience the same level of performance in real time, right on their personal computing devices and displays.

Reflecting on where things are heading in computing, Jon Peddie Research predicted a few months ago that the Client-to-Cloud Revolution would double the time it takes the processing industry to shrink its transistors, from two years to four (i.e. Moore's Law is no longer helpful). For a time I agreed, and then it hit me. Provided these new lines of product sell, the nature of the Client-to-Cloud Revolution is going to force innovation far more than is currently realized. The reason is that growth will be less about keeping up with the Joneses or the pressure of having an exciting new offering to market to the same audiences over and over. This model will instead be driven by the very demanding requirement of getting enough horsepower out to users and their growing appetites for more and more.

Think about it! First, there need to be enough processors to meet the needs of a growing user base. I say growing because I believe this Client-to-Cloud Revolution is going to attract new audiences the markets didn’t have access to before. There will also be fierce competition to earn the users’ mind share over which service(s) provide the best experiences for the money. Solution? You need more processors in the supercomputer to scale for the quantity of users and the delivered quality expectations. Easy to add - done - right? WRONG. You need more space! You need more cooling! You need more electricity! There are real costs and limitations for all of that. Solution? Shrunken dies, more efficiency, reduced space requirements to achieve the same thing…to achieve more? I think there will be market pressures to achieve more.

So when I look at a graph like the above, and even factoring in existing cloud computing sales, I’m envisioning multiples in hardware sales and potential hardware sales, as well as steady pressure to make better products on a regular basis. How else can it go in a demanding content-hungry world such as this?

I think what will likely happen is the PC, smartphone, and console markets will continue with respectable innards because a collaborative computing model between local and remote processors is still very much needed. However, I also think a meaningful evolution is going to happen in that there will be a major enhancement in computing experience, and users will be able to do much more than previously possible. I think the peripheral markets will have an even more expansive future thanks to a very robust computing-anywhere-for-everyone ecosystem; much more than today.

I will conclude by sharing this vision document for the Client-to-Cloud Revolution. It was co-written by Intel, Advanced Micro Devices, M2 Insights, and Maychirch. It speaks to the general criteria needed for this #clienttocloudrevolution, though it’s still in draft form and getting updated by industry. We are holding a formal review and discussion process during a private TIFCA meeting at SIGGRAPH on July 29 in Los Angeles (remote access possible). Non-media readers are encouraged to contact us at [email protected] if they want to participate.
